We cover oriented bounding boxes in depth: their advantages, model architectures, tools, and challenges.

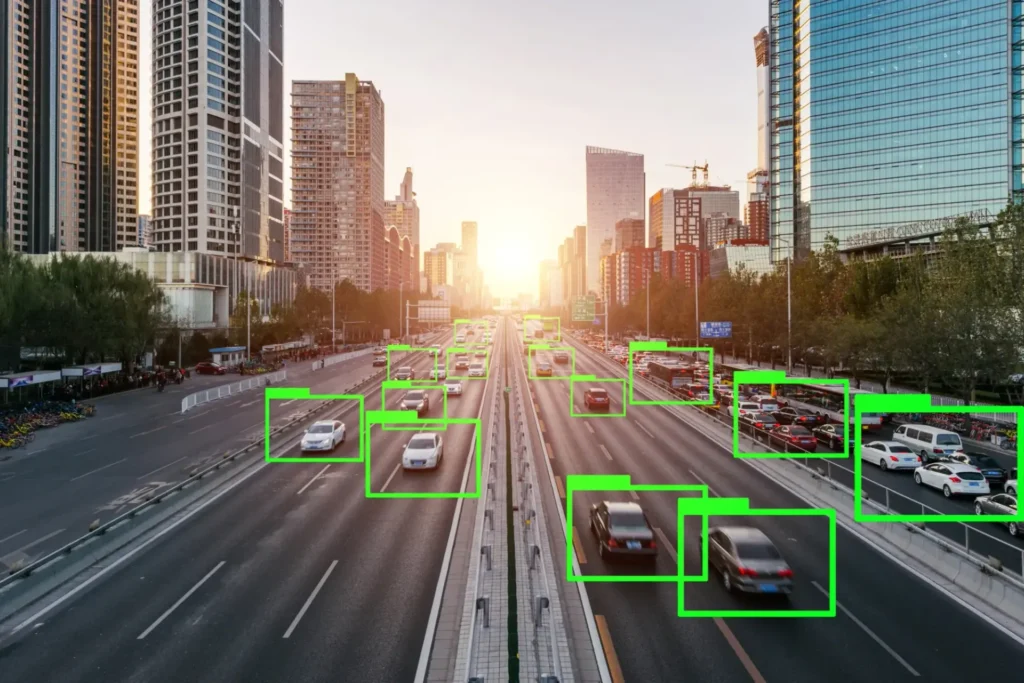

Difference Between Object Prediction with Regular Bounding Boxes vs Oriented Bounding Boxes

When building computer vision systems, the precision of object detection models depends largely on the quality of data annotation. Axis-aligned bounding boxes have been the standard for years, but oriented bounding boxes (OBBs) are gaining attention because they also capture an object's orientation, which an axis-aligned box cannot represent.

In this article, we’ll dive into what oriented bounding box annotation is, explore its benefits compared to regular bounding boxes, examine machine learning models designed to predict OBBs, and highlight how Coral Mountain enables efficient, scalable OBB annotation through advanced tools and workflows.

What is an Oriented Bounding Box?

An Oriented Bounding Box (OBB) is a bounding box that can rotate to align with the orientation of an object. Unlike axis-aligned boxes that remain fixed to horizontal and vertical axes, an OBB rotates to match the object’s angle, offering a tighter, more accurate fit.

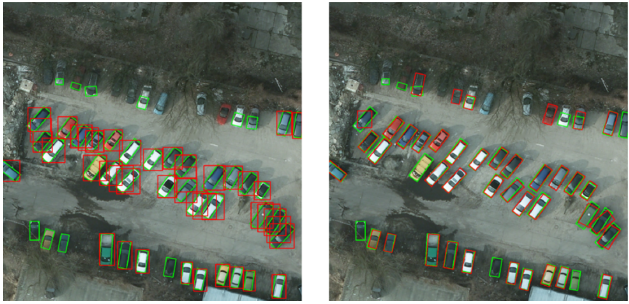

This type of annotation is essential for cases where objects are not perfectly aligned—such as satellite imagery, aerial photos, or industrial inspections—where items appear in different rotations. Using standard boxes in such cases can lead to overlaps, misalignments, and excess background noise.

Key characteristics of oriented bounding boxes include:

- Rotational Fit: Adjusts to match an object’s true orientation for improved alignment.

- Five Defining Parameters: Center coordinates (x, y), width, height, and rotation angle (θ).

- Enhanced Precision: Ideal for elongated, diagonal, or irregularly shaped objects.
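To make the five-parameter representation concrete, here is a minimal sketch that converts (x, y, width, height, θ) into the four corner points of the box. The function name `obb_to_corners` and the angle convention (degrees, counter-clockwise, y axis pointing up) are assumptions made for this illustration; datasets and frameworks differ on both, so check the convention of the format you are working with.

```python
import math

def obb_to_corners(cx, cy, w, h, theta_deg):
    """Return the four corners of an oriented box as (x, y) tuples.

    Assumption: theta_deg is a counter-clockwise rotation about the box
    center, with the y axis pointing up. In image coordinates (y pointing
    down) the same formula appears as a clockwise rotation on screen.
    """
    t = math.radians(theta_deg)
    cos_t, sin_t = math.cos(t), math.sin(t)
    # Corner offsets of the unrotated box, relative to its center.
    offsets = [(-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2)]
    # Rotate each offset, then translate it by the center.
    return [
        (cx + dx * cos_t - dy * sin_t, cy + dx * sin_t + dy * cos_t)
        for dx, dy in offsets
    ]

# A 40 x 20 box centered at (50, 50), rotated by 90 degrees.
for x, y in obb_to_corners(cx=50, cy=50, w=40, h=20, theta_deg=90):
    print(round(x, 2), round(y, 2))
```

The corner form is what most geometry routines expect, while the five-parameter form is more compact to store and to regress.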

Differences Between Axis-Aligned Bounding Boxes and Oriented Bounding Boxes

Oriented bounding boxes bring a new level of precision to image annotation. Here are their main advantages:

- Improved Precision: OBBs fit tightly around objects regardless of orientation, reducing background interference and annotation errors.

- Reduced Overlap: In dense scenes, OBBs minimize overlap, resulting in cleaner and more distinct boundaries.

- Better for Irregular Shapes: Especially beneficial for detecting tilted vehicles, rotated text, or diagonal structures in aerial images.

- Orientation Awareness: OBBs provide explicit information about an object’s angle—vital for object tracking, motion prediction, and scene understanding.

In short, OBBs deliver richer spatial information that helps machine learning models achieve higher detection accuracy in real-world scenarios.
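A small worked example shows how much background an axis-aligned box can pick up. The sketch below assumes an idealized rectangular object of 100 × 20 pixels (illustrative numbers only) and compares its own area with the area of the tightest axis-aligned box around it at several rotation angles. The helper name `enclosing_aabb_area` is hypothetical.

```python
import math

def enclosing_aabb_area(w, h, theta_deg):
    """Area of the tightest axis-aligned box around a w x h rectangle
    that has been rotated by theta_deg about its center."""
    t = math.radians(theta_deg)
    c, s = abs(math.cos(t)), abs(math.sin(t))
    # Horizontal and vertical extents of the rotated rectangle.
    return (w * c + h * s) * (w * s + h * c)

w, h = 100, 20  # illustrative object size in pixels
for angle in (0, 15, 45):
    aabb = enclosing_aabb_area(w, h, angle)
    background = 1 - (w * h) / aabb
    print(f"{angle:>2} deg: OBB area {w * h}, AABB area {aabb:.0f}, "
          f"background share {background:.0%}")
```

At 45° the axis-aligned box covers about 7,200 square pixels for an object that occupies 2,000, so most of the box is background. The oriented box stays at 2,000 regardless of the angle.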

Robustness in Object Detection

Oriented bounding boxes significantly enhance object detection in complex visual conditions:

- Rotated Objects: Perfect for use cases like aerial surveillance, robotics, and autonomous driving, where objects appear at various angles.

- Varied Object Poses: Handle dynamic, non-uniform orientations effectively, resulting in more robust and generalizable detection systems.

Machine Learning Models for Oriented Bounding Box Detection

Several advanced deep learning models have been developed to detect oriented bounding boxes, improving precision and orientation awareness. Key models include:

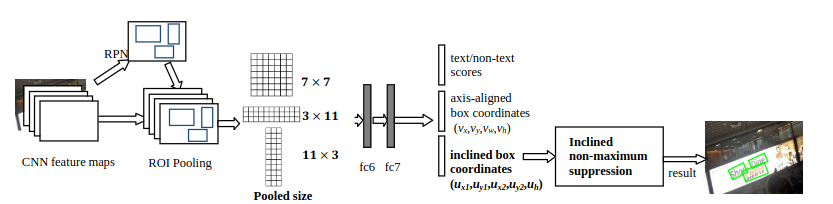

- Rotated Region-based Convolutional Neural Network (R2CNN)

An extension of Faster R-CNN that introduces a Rotated Region Proposal Network (R-RPN) and a Rotated RoI Pooling layer. It achieves high accuracy for rotated objects, making it useful for text detection and aerial imagery analysis.

- Oriented Bounding Box Regression (OBBR)

Uses a regression-based approach to predict center coordinates, width, height, and rotation angle directly. Compatible with popular backbones like ResNet and VGG, OBBR offers flexibility and adaptability for various computer vision tasks.

- YOLO-v5 (Rotated Version)

An enhanced version of the YOLO architecture modified to handle rotated objects. It combines YOLO’s speed with orientation awareness, which suits real-time applications such as drone vision or traffic monitoring.

- Rotated Single Shot Multibox Detector (R-SSD)

A variant of SSD that adds rotation parameters while maintaining fast inference speeds. It’s a reliable option for real-time object detection when accuracy and efficiency must coexist.

Calculating Oriented Bounding Box IoU

Intersection over Union (IoU) measures how accurately a predicted bounding box matches the ground truth. While IoU is straightforward for axis-aligned boxes, calculating it for rotated OBBs is more complex.

To compute OBB IoU:

- Determine the rotated coordinates of each bounding box.

- Treat both boxes as polygons.

- Compute intersection and union areas using geometry libraries (e.g., Shapely in Python).

- Apply the standard IoU formula: IoU = area of intersection / area of union.

This approach ensures consistent evaluation for models trained on oriented object detection tasks.
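The steps above can be sketched in a few dozen lines. A geometry library such as Shapely would normally handle the polygon intersection; to keep this example dependency-free, it substitutes Sutherland-Hodgman clipping, which is valid here because both boxes are convex. The function names and the (cx, cy, w, h, θ in degrees) tuple layout are assumptions for this illustration, not a standard API.

```python
import math

def obb_to_polygon(cx, cy, w, h, theta_deg):
    """Corners of the box in counter-clockwise order (y axis pointing up)."""
    t = math.radians(theta_deg)
    c, s = math.cos(t), math.sin(t)
    offsets = [(-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2)]
    return [(cx + dx * c - dy * s, cy + dx * s + dy * c) for dx, dy in offsets]

def polygon_area(poly):
    """Shoelace formula; returns a non-negative area."""
    total = 0.0
    for i in range(len(poly)):
        x1, y1 = poly[i]
        x2, y2 = poly[(i + 1) % len(poly)]
        total += x1 * y2 - x2 * y1
    return abs(total) / 2

def clip_convex(subject, clipper):
    """Sutherland-Hodgman: clip `subject` by a convex, counter-clockwise `clipper`."""
    output = list(subject)
    for i in range(len(clipper)):
        if not output:
            break
        ax, ay = clipper[i]
        bx, by = clipper[(i + 1) % len(clipper)]

        def side(p):
            # Positive when p lies to the left of edge a -> b (inside the clipper).
            return (bx - ax) * (p[1] - ay) - (by - ay) * (p[0] - ax)

        points, output = output, []
        for j in range(len(points)):
            p, q = points[j], points[(j + 1) % len(points)]
            sp, sq = side(p), side(q)
            if sp >= 0:
                output.append(p)
            if (sp > 0 and sq < 0) or (sp < 0 and sq > 0):
                # The edge p -> q crosses the clip line: keep the crossing point.
                t = sp / (sp - sq)
                output.append((p[0] + t * (q[0] - p[0]), p[1] + t * (q[1] - p[1])))
    return output

def obb_iou(box_a, box_b):
    """IoU of two oriented boxes given as (cx, cy, w, h, theta_deg)."""
    poly_a, poly_b = obb_to_polygon(*box_a), obb_to_polygon(*box_b)
    overlap = clip_convex(poly_a, poly_b)
    inter = polygon_area(overlap) if len(overlap) >= 3 else 0.0
    union = polygon_area(poly_a) + polygon_area(poly_b) - inter
    return inter / union if union > 0 else 0.0

# Two 4 x 2 boxes sharing a center, one rotated by 90 degrees.
print(obb_iou((0, 0, 4, 2, 0), (0, 0, 4, 2, 90)))
```

In the usage line, the two boxes overlap in a 2 × 2 square, so the IoU is 4 / (8 + 8 − 4), or about 0.33. For production evaluation, a tested geometry library or the rotated-IoU routine of your detection framework is usually the safer choice.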

Technical Considerations and Challenges

- Annotation Complexity

Manually annotating OBBs is more intricate than regular boxes. It requires tools that support angle rotation, precise alignment, and fine-tuning. For large datasets, automation or semi-automated annotation pipelines become essential. - Model Training

Selecting a model capable of handling rotation parameters is crucial. Training should include data augmentation techniques such as random rotations and perspective transformations to improve robustness. - Inference Speed

Predicting rotated boxes adds computational complexity, potentially affecting real-time performance. Efficient architectures and optimization are key to maintaining speed without compromising accuracy.
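For the random-rotation augmentation mentioned under Model Training, the labels have to be rotated together with the image. The sketch below covers only the label side and rests on several assumptions: boxes are stored as (cx, cy, w, h, θ in degrees), angles are normalized to [0, 180), and the image is rotated about its center by the same angle under the same sign convention. The names `rotate_obb` and `random_rotation` are hypothetical.

```python
import math
import random

def rotate_obb(box, angle_deg, pivot):
    """Rotate one box (cx, cy, w, h, theta_deg) by angle_deg about pivot (px, py)."""
    cx, cy, w, h, theta = box
    px, py = pivot
    a = math.radians(angle_deg)
    c, s = math.cos(a), math.sin(a)
    dx, dy = cx - px, cy - py
    # The center moves along a circle around the pivot.
    new_cx = px + dx * c - dy * s
    new_cy = py + dx * s + dy * c
    # A rigid rotation keeps width and height; only the angle shifts.
    # A rectangle turned by 180 degrees is the same rectangle, hence the modulo.
    new_theta = (theta + angle_deg) % 180.0
    return (new_cx, new_cy, w, h, new_theta)

def random_rotation(boxes, image_size, max_deg=30.0, rng=random):
    """Pick one random angle and apply it to every box in the image."""
    width, height = image_size
    angle = rng.uniform(-max_deg, max_deg)
    pivot = (width / 2, height / 2)
    return angle, [rotate_obb(b, angle, pivot) for b in boxes]

angle, rotated = random_rotation([(120, 80, 60, 20, 10)], image_size=(640, 480))
print(angle, rotated)
```

The returned angle is what you would pass to the image rotation step so that pixels and labels stay aligned. Boxes whose centers leave the frame after rotation still need to be filtered or clipped, which this sketch does not attempt.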

Oriented Bounding Box Annotation Tools

Annotating OBBs requires specialized software capable of handling rotations, precise adjustments, and collaborative workflows. At a minimum, such tools should support:

- Creation and rotation of bounding boxes

- Large-scale dataset management

- Quality control and revision workflows

- Detailed annotation tracking and reporting

Leading platforms that support OBB annotation include:

Encord, SuperAnnotate, Dataloop, and Label Studio.

At Coral Mountain, our annotation platform is built to streamline OBB creation through intuitive and powerful tools:

Two-Point Method

Create a standard bounding box using two corner points, then rotate it with an interactive pivot icon. Holding “Shift” enables rotation in 5° increments for precision control.

Extreme Points Method

Click the four extreme ends of the object to automatically generate an oriented bounding box. This approach significantly speeds up the labeling process and ensures a tighter fit.
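How a tool turns clicked points into a box is an implementation detail that is not described here. As an illustration only, one plausible approach is to fit the minimum-area enclosing rectangle to the clicked points. The sketch below relies on the known property that such a rectangle has one side parallel to an edge of the points' convex hull, and for a handful of points it simply tries the direction of every point pair. The function name `min_area_obb` is hypothetical.

```python
import math
from itertools import combinations

def min_area_obb(points):
    """Smallest enclosing rectangle of `points` as (cx, cy, w, h, theta_deg).

    Brute force over pair directions; fine for a few clicked points,
    but too slow for large point sets.
    """
    best = None
    for (x1, y1), (x2, y2) in combinations(points, 2):
        if (x1, y1) == (x2, y2):
            continue  # identical points define no direction
        t = math.atan2(y2 - y1, x2 - x1)
        c, s = math.cos(t), math.sin(t)
        # Coordinates of every point in a frame aligned with this direction.
        us = [x * c + y * s for x, y in points]
        vs = [-x * s + y * c for x, y in points]
        w, h = max(us) - min(us), max(vs) - min(vs)
        if best is None or w * h < best[0]:
            # Center of the rectangle in the aligned frame, mapped back.
            uc, vc = (max(us) + min(us)) / 2, (max(vs) + min(vs)) / 2
            cx, cy = uc * c - vc * s, uc * s + vc * c
            best = (w * h, (cx, cy, w, h, math.degrees(t) % 180.0))
    if best is None:
        raise ValueError("need at least two distinct points")
    return best[1]

# Four extreme points of a diamond-shaped object.
print(min_area_obb([(0, 1), (1, 0), (2, 1), (1, 2)]))
```

Width and height are measured along and across the returned angle, so the same rectangle can come back as (w, h, θ) or (h, w, θ + 90°) depending on which pair is tried first. A real tool would also need to fix that ambiguity according to its own angle convention.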

Conclusion

Oriented bounding boxes represent a significant leap in computer vision annotation, offering superior accuracy, tighter fits, and detailed orientation data compared to traditional bounding boxes. While they introduce challenges—like increased annotation complexity and slower inference—they unlock far greater potential for applications in autonomous systems, aerial mapping, industrial inspection, and robotics.

Coral Mountain Data is a data annotation and data collection company that provides high-quality data annotation services for Artificial Intelligence (AI) and Machine Learning (ML) models, ensuring reliable input datasets. Our annotation solutions include LiDAR point cloud data, enhancing the performance of AI and ML models. Coral Mountain Data also provides high-quality data about coral reefs, including sounds of coral reefs, marine life, waves….

Copyright © 2024 Coral Mountain Data. All rights reserved.