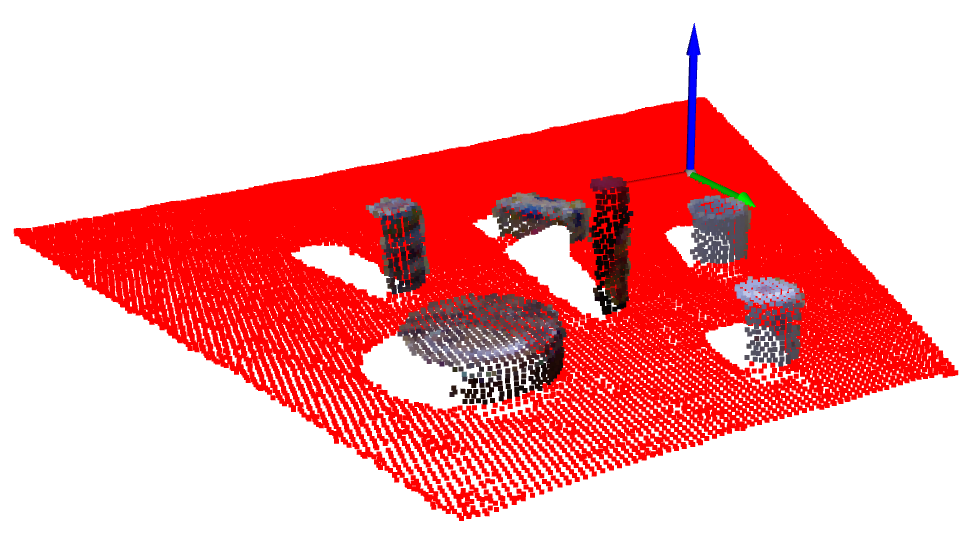

Explore the world of object detection from point clouds. Learn about techniques, challenges, and real-world applications.

Introduction

Object detection from point clouds is transforming how machines perceive and process 3D environments. This article presents the fundamental concepts, methodologies, and future trends in this technology.

What is a Point Cloud?

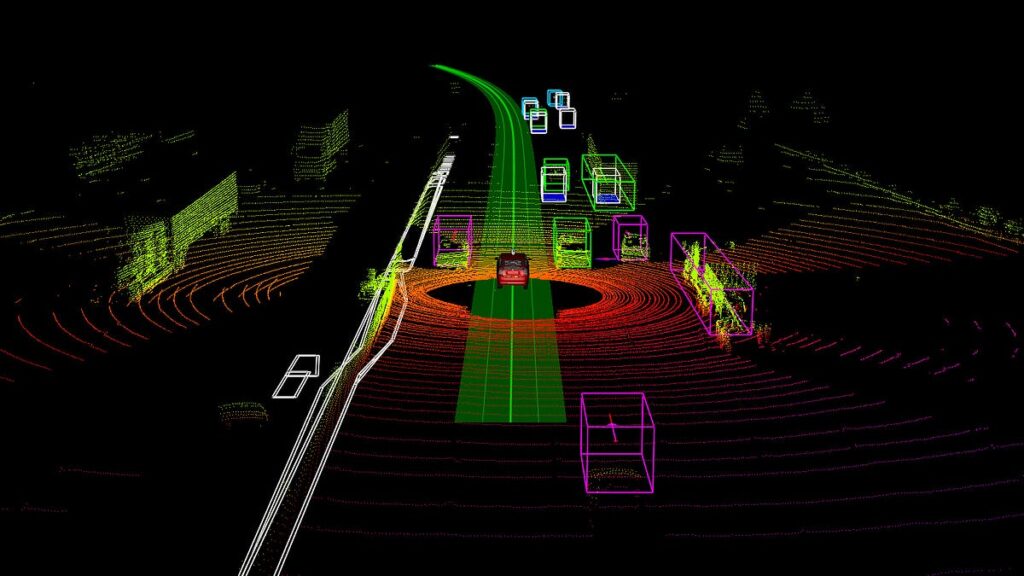

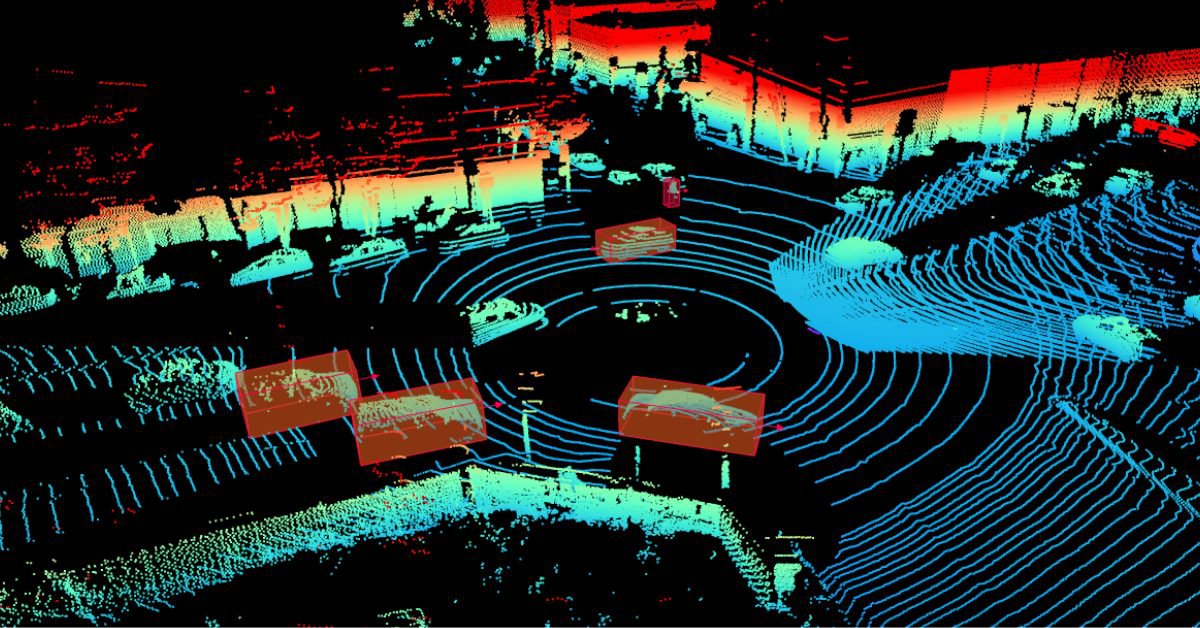

A point cloud is a set of data points in three-dimensional space that samples the surfaces of objects. Each point stores x, y, and z coordinates, and often extra attributes such as intensity or color. The data is typically collected with sensors such as LiDAR (Light Detection and Ranging), which measure an object’s shape and position.
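As a minimal sketch of this idea, a point cloud can be held as a plain list of (x, y, z) tuples. The helper names `centroid` and `bounding_box` and the sample values are illustrative assumptions, not part of any particular library.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]


def centroid(points: List[Point]) -> Point:
    """Average position of all points in the cloud."""
    n = len(points)
    return (
        sum(p[0] for p in points) / n,
        sum(p[1] for p in points) / n,
        sum(p[2] for p in points) / n,
    )


def bounding_box(points: List[Point]) -> Tuple[Point, Point]:
    """Axis-aligned box (min corner, max corner) enclosing the cloud."""
    xs, ys, zs = zip(*points)
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))


# A tiny hand-written cloud; real scans contain thousands to millions of points.
cloud = [(0.0, 0.0, 0.0), (2.0, 0.0, 0.0), (2.0, 4.0, 0.0), (0.0, 4.0, 2.0)]
print(centroid(cloud))
print(bounding_box(cloud))
```

In practice, libraries usually store the same data as an N×3 array, but the structure is the same: one row per point, one column per coordinate.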

Why is Object Detection from Point Clouds Important?

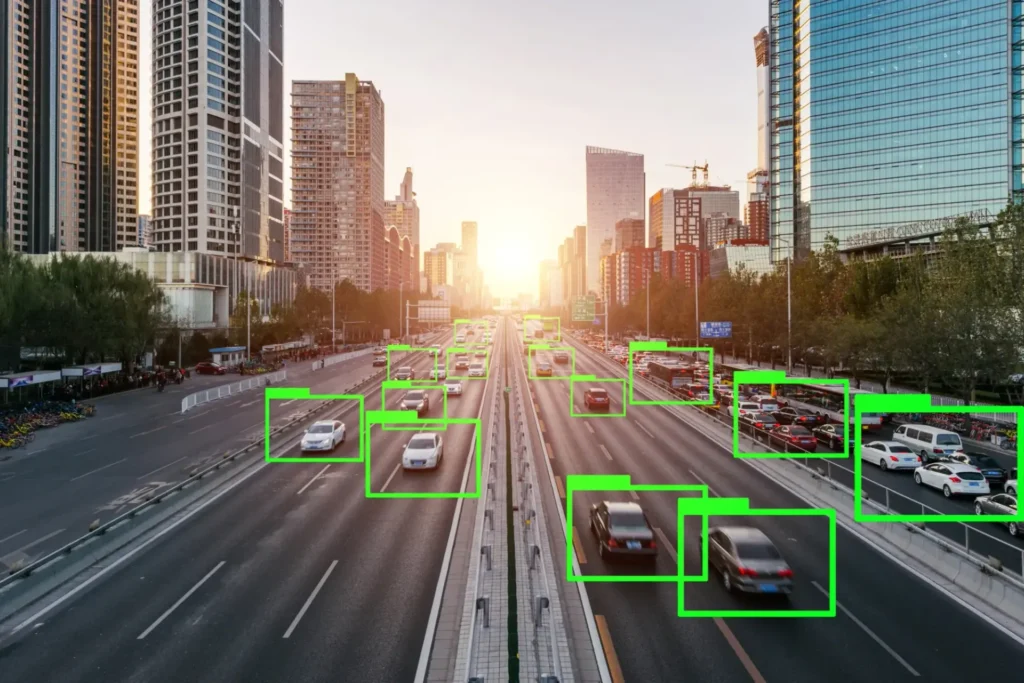

The ability to accurately detect objects in point clouds is critical for applications requiring spatial awareness, such as:

- Autonomous vehicles: support navigation and obstacle avoidance.
- Robotics: enable manipulation of objects in dynamic environments.
- Augmented reality (AR): enhance depth perception for immersive experiences.
- Computer vision: provide a more complete understanding of 3D environments.

Point Cloud Acquisition Methods

Common ways to collect point clouds include:

- LiDAR sensors: measure distance with laser pulses, producing high-precision, long-range, dense point clouds, ideal for object recognition.
- Depth cameras: capture per-pixel depth information, suitable for indoor mapping.
- Stereo vision: uses two cameras to estimate depth from the disparity between the two views, efficient for mobile and AR devices.
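To illustrate how depth cameras and stereo rigs yield 3D points, the sketch below assumes an ideal pinhole camera with no lens distortion. The function names and the intrinsics `fx`, `fy`, `cx`, `cy` (focal lengths and principal point, in pixels) are hypothetical parameters chosen for the example.

```python
def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project a depth image (rows of depths in meters) into 3D points.

    Pixels with a depth of zero or less are treated as invalid and skipped.
    """
    points = []
    for v, row in enumerate(depth):          # v: pixel row
        for u, z in enumerate(row):          # u: pixel column
            if z <= 0:
                continue
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


def stereo_depth(disparity_px, focal_px, baseline_m):
    """Depth of a matched pixel in a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


depth_image = [[0.0, 2.0],
               [2.0, 4.0]]
print(depth_to_points(depth_image, fx=2.0, fy=2.0, cx=0.0, cy=0.0))
print(stereo_depth(disparity_px=20.0, focal_px=400.0, baseline_m=0.5))
```

The stereo formula also shows why distant objects are harder to measure: depth is inversely proportional to disparity, so a one-pixel matching error matters more the farther away the object is.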

Challenges and Limitations of Point Cloud Data

Challenges with point cloud data include:

- Sparsity: points are irregularly spaced and unordered, and density drops with distance from the sensor.
- Noise: inaccurate points complicate detection.
- High computational requirements: especially for real-time applications.

Robust detection algorithms are needed to handle these issues while staying accurate.

Feature Computation from Point Clouds

Point Descriptors

- PFH (Point Feature Histogram): a 3D descriptor that encodes the geometric relationships between every pair of points in a point's neighborhood, using their surface normals. It is accurate but computationally intensive, roughly O(nk²) for n points with k neighbors each.
- FPFH (Fast Point Feature Histogram): a lighter variant of PFH that relates each point only to its direct neighbors and then combines the neighbors' histograms, reducing the cost to roughly O(nk). Better suited to real-time applications.
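The geometric relationships that PFH and FPFH bin into histograms are usually three angles computed for a pair of points and their normals. The sketch below follows the commonly documented Darboux-frame formulation; the function name `pair_features` and the small vector helpers are assumptions for illustration, and the normals are assumed to be unit length.

```python
import math


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def unit(a):
    length = math.sqrt(dot(a, a))
    if length == 0:
        raise ValueError("zero-length vector")
    return tuple(x / length for x in a)


def pair_features(p_s, n_s, p_t, n_t):
    """Return (alpha, phi, theta, d) for a source/target point pair.

    p_s, p_t are positions; n_s, n_t are unit surface normals.
    Raises ValueError if the points coincide or n_s is parallel to the
    line joining them, since the local frame is undefined in those cases.
    """
    diff = sub(p_t, p_s)
    d = math.sqrt(dot(diff, diff))
    direction = unit(diff)
    u = n_s                           # first frame axis: source normal
    v = unit(cross(u, direction))     # second axis, perpendicular to u
    w = cross(u, v)                   # third axis completes the frame
    alpha = dot(v, n_t)
    phi = dot(u, direction)
    theta = math.atan2(dot(w, n_t), dot(u, n_t))
    return alpha, phi, theta, d


# Two points on a flat surface with identical normals: all angles are zero.
print(pair_features((0, 0, 0), (0, 0, 1), (1, 0, 0), (0, 0, 1)))
```

A descriptor is then built by computing these values for many point pairs in a neighborhood and counting them into histogram bins. That binning step is omitted here.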

Feature Extraction Techniques

- Max pooling: takes the maximum of each feature channel across a group of points, reducing dimensionality while retaining the strongest responses. The result does not depend on the order of the points.
- Multi-patch extraction: divides the point cloud into overlapping regions and extracts features from each separately, better capturing complex geometries.
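Max pooling over a group of points can be sketched in a few lines. The per-point feature values below are made up for illustration, and `max_pool` is a hypothetical helper name.

```python
def max_pool(features):
    """Channel-wise maximum over a list of equal-length feature vectors."""
    return [max(channel) for channel in zip(*features)]


# Three points, each described by a 3-channel feature vector.
per_point = [
    [0.1, 0.9, 0.3],
    [0.7, 0.2, 0.4],
    [0.5, 0.5, 0.8],
]

pooled = max_pool(per_point)
shuffled = max_pool(list(reversed(per_point)))
print(pooled)              # one value per channel
print(pooled == shuffled)  # point order does not change the result
```

Because the maximum is taken per channel, the output has a fixed size regardless of how many points are in the group, which is useful when point counts vary between regions.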

Object Detection Methods in Point Clouds

Traditional Approaches

- RANSAC (Random Sample Consensus): an iterative method that repeatedly fits a geometric model, such as a plane or cylinder, to a small random sample of points and keeps the model with the most inliers. Robust to outliers, so it is effective in noisy environments.
- Hough Transform: lets each point vote in a parameter space so that shapes (lines, circles, etc.) appear as peaks. Effective in structured scenes but computationally expensive.
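The RANSAC loop described above can be sketched for the plane case as follows. This is a simplified illustration under stated assumptions: the threshold, iteration count, and fixed random seed are arbitrary choices, and the function names are hypothetical.

```python
import math
import random


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def plane_from_points(a, b, c):
    """Plane through three points as (unit normal, d) with normal.p + d = 0."""
    normal = cross(sub(b, a), sub(c, a))
    length = math.sqrt(dot(normal, normal))
    if length < 1e-12:          # the three points are (nearly) collinear
        return None
    normal = tuple(x / length for x in normal)
    return normal, -dot(normal, a)


def ransac_plane(points, threshold=0.05, iterations=200, seed=0):
    """Return the plane with the most inliers and the list of those inliers."""
    rng = random.Random(seed)
    best_plane, best_inliers = None, []
    for _ in range(iterations):
        plane = plane_from_points(*rng.sample(points, 3))
        if plane is None:
            continue
        normal, d = plane
        # A point is an inlier if its distance to the plane is small enough.
        inliers = [p for p in points if abs(dot(normal, p) + d) <= threshold]
        if len(inliers) > len(best_inliers):
            best_plane, best_inliers = plane, inliers
    return best_plane, best_inliers


# Flat ground at z = 0 plus a few points floating above it.
ground = [(float(x), float(y), 0.0) for x in range(5) for y in range(5)]
outliers = [(1.0, 1.0, 1.0), (3.0, 2.0, 2.0), (0.5, 3.5, 3.0)]
plane, inliers = ransac_plane(ground + outliers)
print(plane, len(inliers))
```

A common use of this pattern in driving scenes is to find and remove the ground plane first, so that the remaining points can be clustered into candidate objects.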

Deep Learning Approaches

- PointNet: directly processes unordered point sets, pioneering deep learning for point clouds.
- VoxelNet: converts point clouds into voxels and applies 3D CNNs for detection.
- PointRCNN: adapts the R-CNN architecture for 3D, achieving high accuracy in object localization.
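The voxel conversion step used by voxel-based detectors can be sketched as a simple grouping of points by grid cell. This shows only the partitioning, not any learned network layers, and the function name and voxel size are assumptions for the example.

```python
import math
from collections import defaultdict


def voxelize(points, voxel_size):
    """Group points by the integer index of the voxel that contains them."""
    voxels = defaultdict(list)
    for p in points:
        key = tuple(math.floor(c / voxel_size) for c in p)
        voxels[key].append(p)
    return dict(voxels)


points = [
    (0.1, 0.1, 0.1),
    (0.4, 0.2, 0.3),
    (0.6, 0.1, 0.1),
    (-0.1, 0.0, 0.0),
]
grid = voxelize(points, voxel_size=0.5)
for index, members in sorted(grid.items()):
    print(index, len(members))
```

Only occupied voxels are stored, which matters because most of a 3D grid around a sensor is empty space. A network would then compute a feature per occupied voxel before applying 3D convolutions.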

Comparison and Evaluation

Evaluation criteria include:

- Accuracy
- Computational complexity
- Robustness to noise

For example, PointRCNN achieves high accuracy but requires more computational resources than PointNet.

Challenges and Considerations

- Noise and outliers: preprocessing (filtering and normalization) improves data quality.
- Occlusion and clutter: overlapping objects complicate separation; multi-view fusion helps mitigate this.
- Computational complexity: large datasets demand efficient algorithms. Down-sampling and data compression can help reduce computational load.
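One common form of the filtering step is statistical outlier removal: points whose average distance to their nearest neighbors is unusually large are dropped. The sketch below is a brute-force version for small clouds; the parameter names `k` and `std_ratio` and their defaults are assumptions, and real pipelines would use a spatial index instead of comparing every pair.

```python
import math
import statistics


def remove_statistical_outliers(points, k=3, std_ratio=1.0):
    """Keep points whose mean distance to their k nearest neighbors is
    at most (mean + std_ratio * standard deviation) over the whole cloud."""
    if len(points) < 2:
        return list(points)
    mean_dists = []
    for i, p in enumerate(points):
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        nearest = dists[:k]
        mean_dists.append(sum(nearest) / len(nearest))
    limit = statistics.mean(mean_dists) + std_ratio * statistics.pstdev(mean_dists)
    return [p for p, d in zip(points, mean_dists) if d <= limit]


# Eight corners of a unit cube plus one stray point far away.
cube = [(float(x), float(y), float(z))
        for x in (0, 1) for y in (0, 1) for z in (0, 1)]
stray = (10.0, 10.0, 10.0)
cleaned = remove_statistical_outliers(cube + [stray])
print(len(cleaned), stray in cleaned)
```

The same grid-cell idea used for voxelization can serve as down-sampling: replacing all points in a cell by their centroid reduces the point count while roughly preserving shape.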

Performance Metrics

- IoU (Intersection over Union): the volume of overlap between a predicted box and a ground-truth box, divided by the volume of their union.
- Precision: the fraction of predictions that are correct, TP / (TP + FP).
- Recall: the fraction of actual objects that are correctly detected, TP / (TP + FN).
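These metrics can be sketched for the simplest case of axis-aligned 3D boxes. Detection benchmarks often use rotated boxes, which need a more involved intersection computation, so treat this as a simplification. The box layout `(xmin, ymin, zmin, xmax, ymax, zmax)` and the function names are assumptions for the example.

```python
def iou_3d(box_a, box_b):
    """IoU of two axis-aligned boxes (xmin, ymin, zmin, xmax, ymax, zmax)."""
    intersection = 1.0
    for axis in range(3):
        overlap = (min(box_a[axis + 3], box_b[axis + 3])
                   - max(box_a[axis], box_b[axis]))
        if overlap <= 0:
            return 0.0          # boxes do not overlap along this axis
        intersection *= overlap

    def volume(box):
        return (box[3] - box[0]) * (box[4] - box[1]) * (box[5] - box[2])

    union = volume(box_a) + volume(box_b) - intersection
    return intersection / union


def precision_recall(tp, fp, fn):
    """Precision and recall from true-positive, false-positive,
    and false-negative counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


predicted = (0.0, 0.0, 0.0, 2.0, 2.0, 2.0)
ground_truth = (1.0, 0.0, 0.0, 3.0, 2.0, 2.0)
print(iou_3d(predicted, ground_truth))
print(precision_recall(tp=8, fp=2, fn=4))
```

A prediction is usually counted as a true positive only when its IoU with a ground-truth box exceeds a chosen threshold, so the three metrics are linked: raising the threshold tends to lower both precision and recall.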

Future Trends and Research Directions

- New deep learning architectures: improved performance, optimized for resource-limited devices such as drones.
- Real-time processing: reducing latency for critical applications like autonomous driving.
- Multi-modal fusion: combining point cloud data with image data for enhanced spatial perception.

Coral Mountain Data

Coral Mountain Data provides high-quality data annotation services for AI and machine learning models. Our solutions include processing and labeling point cloud datasets captured by LiDAR, ensuring superior training data quality that significantly enhances model performance.



Copyright © 2024 Coral Mountain Data. All rights reserved.