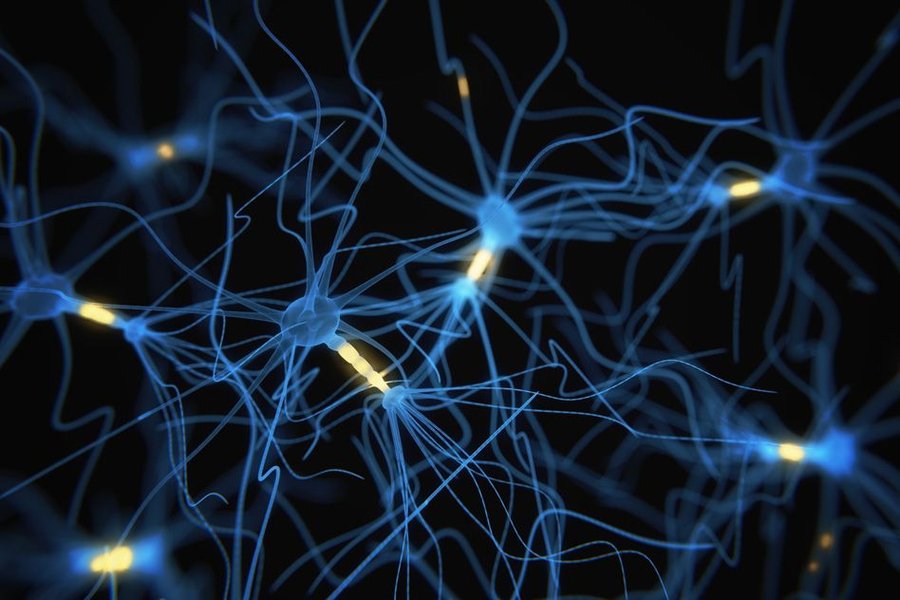

Spiking Neural Networks (SNNs) represent a new generation of neural networks designed to better imitate how biological neurons communicate and process information. Let’s take a closer look at what makes them unique.

Neural Connections

Neural connections inside the human brain

Most of today’s neural networks—sometimes called the second generation—have enabled massive breakthroughs across AI fields. Yet, from a biological perspective, they remain only loosely inspired by the brain. Deep Neural Networks (DNNs) borrow their layered architecture from neuroscience models of the cortex, but at the functional level, there are only shallow similarities between real neurons and Artificial Neural Networks (ANNs).

One striking difference lies in how they operate: artificial neurons in ANNs are continuous, clock-driven function approximators, while biological neurons transmit asynchronous spikes—brief, precise electrical signals that mark the occurrence of important events.

What are Spiking Neural Networks?

SNNs, sometimes described as the third generation of neural networks, aim to bridge this gap. They rely on biologically plausible neuron models to process information in ways closer to real neural circuits. This gives them several potential advantages: event-driven computation, reduced power requirements, extremely fast responses, online learning capabilities, and natural parallelism.

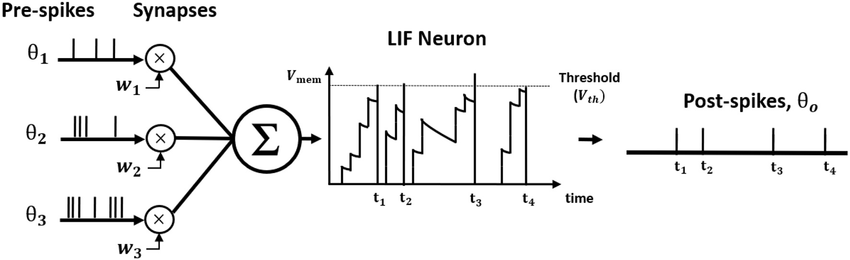

Unlike ANNs, which use continuous values, SNNs communicate via spikes—discrete events in time. Whether or not a neuron spikes depends on dynamic equations modeling biological processes, most importantly the membrane potential. When this potential exceeds a threshold, the neuron fires, then resets.

The Leaky Integrate-and-Fire (LIF) Model

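The Leaky Integrate-and-Fire (LIF) model is one of the most widely used abstractions for simulating spiking neurons. It describes how a neuron integrates incoming spikes, received through its dendrites, into its membrane potential: each input raises the potential, and in the absence of input the potential leaks back toward a resting value. Once the threshold is crossed, the neuron fires a spike, transmitting information to downstream neurons, and resets.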
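The integrate, leak, fire, and reset cycle can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function name `simulate_lif`, the parameter values (`tau`, `v_threshold`, and so on), and the forward-Euler discretization are all assumptions chosen for readability, not taken from any particular library.

```python
def simulate_lif(input_current, dt=1.0, tau=10.0,
                 v_rest=0.0, v_threshold=1.0, v_reset=0.0):
    """Simulate one leaky integrate-and-fire neuron with forward-Euler steps.

    input_current: sequence of input values, one per time step (arbitrary units).
    Returns (spikes, trace): spikes[i] is 1 if the neuron fired at step i,
    and trace[i] is the membrane potential after step i (after any reset).
    """
    v = v_rest
    spikes, trace = [], []
    for current in input_current:
        # The leak term pulls v back toward v_rest; the input pushes it up.
        v += (dt / tau) * (-(v - v_rest) + current)
        if v >= v_threshold:
            spikes.append(1)  # threshold crossed: emit a spike
            v = v_reset       # and reset the potential
        else:
            spikes.append(0)
        trace.append(v)
    return spikes, trace


if __name__ == "__main__":
    strong, _ = simulate_lif([1.5] * 50)  # drive that can reach threshold
    weak, _ = simulate_lif([0.5] * 50)    # drive that the leak cancels out
    print("strong input spike count:", sum(strong))
    print("weak input spike count:", sum(weak))
```

With these illustrative values, a weak constant input never fires because the leak balances it below threshold, while a stronger input produces a regular spike train.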

In this system, information is encoded not just in the presence of spikes but also in their frequency, timing, and patterns. Even with sparse spikes, a surprising amount of information can be represented—potentially even binary codes, based on whether a spike occurs or not at a given moment.
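To make the idea of encoding by frequency versus timing concrete, here is a small sketch of two common schemes: rate coding, where a stronger input spikes more often, and latency coding, where a stronger input spikes earlier. The function names and the assumption that inputs are normalized to the range [0, 1] are illustrative choices, not part of the original article.

```python
import random


def rate_encode(intensity, n_steps, rng):
    """Rate coding: at each step, fire with probability `intensity` (in [0, 1])."""
    return [1 if rng.random() < intensity else 0 for _ in range(n_steps)]


def latency_encode(intensity, n_steps):
    """Latency coding: emit at most one spike; stronger inputs fire earlier.

    An intensity of 0 produces no spike at all.
    """
    train = [0] * n_steps
    if intensity > 0:
        spike_step = round((1.0 - intensity) * (n_steps - 1))
        train[spike_step] = 1
    return train


if __name__ == "__main__":
    rng = random.Random(0)  # seeded only so that runs are repeatable
    print("rate, bright pixel:   ", rate_encode(0.8, 20, rng))
    print("rate, dim pixel:      ", rate_encode(0.2, 20, rng))
    print("latency, bright pixel:", latency_encode(0.8, 20))
    print("latency, dim pixel:   ", latency_encode(0.2, 20))
```

Latency coding uses a single spike per value, which shows how sparse spike trains can still carry information through timing alone.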

Although moving from continuous outputs (ANNs) to discrete spike trains might seem like a limitation, spike-based communication provides a natural way to handle spatio-temporal data, such as sensory inputs. Spatially, neurons interact mostly with their neighbors, echoing convolutional networks. Temporally, spike sequences encode information over time, giving SNNs the ability to process sequential data without the complexities of recurrent networks. Theoretical work also suggests that networks of spiking neurons are at least as computationally powerful as networks of traditional ANN neurons, and can compute some functions with fewer units.

Advantages of SNNs over ANNs

Energy efficiency

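SNNs only transmit data when spikes occur, whereas ANNs compute every activation on every forward pass, whether or not the input carries new information. This sparsity can substantially reduce energy usage, particularly on hardware designed to exploit it, an important benefit in the age of large-scale AI models.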
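The difference can be illustrated by counting operations for one layer. The sketch below is a rough proxy only: operation counts are not energy measurements, and the function names and toy weights are hypothetical. It contrasts a dense update, which touches every input, with an event-driven update, which touches only the inputs that spiked.

```python
def dense_update(weights, activations):
    """ANN-style: every input contributes a multiply-accumulate, even when it is zero."""
    totals = [0.0] * len(weights[0])
    ops = 0
    for i, activation in enumerate(activations):
        for j, w in enumerate(weights[i]):
            totals[j] += activation * w
            ops += 1
    return totals, ops


def event_driven_update(weights, spike_indices):
    """SNN-style: only inputs that spiked add their weights; silent inputs cost nothing."""
    totals = [0.0] * len(weights[0])
    ops = 0
    for i in spike_indices:
        for j, w in enumerate(weights[i]):
            totals[j] += w  # a spike is binary, so no multiplication is needed
            ops += 1
    return totals, ops


if __name__ == "__main__":
    # Toy layer: 4 inputs, 2 outputs; weights[i][j] connects input i to output j.
    weights = [[0.5, 1.0], [0.25, 2.0], [1.0, 1.0], [0.75, 0.5]]
    dense_totals, dense_ops = dense_update(weights, [0, 1, 0, 1])
    event_totals, event_ops = event_driven_update(weights, [1, 3])
    print("dense:       ", dense_totals, "ops =", dense_ops)
    print("event-driven:", event_totals, "ops =", event_ops)
```

Both functions produce the same totals for the same binary input, but the event-driven version does work proportional to the number of spikes rather than the number of inputs.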

Real-time processing

Spikes are processed as they arrive. For example, unlike conventional speech recognition systems that process entire chunks of audio, SNNs can handle speech streams continuously, closer to how the brain perceives sounds.
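A streaming neuron can be sketched as a Python generator that consumes timestamped input events one at a time and emits an output spike as soon as the threshold is crossed, without waiting for the rest of the stream. The names `stream_lif`, `tau`, and `v_threshold`, and the choice of exponential decay between events, are assumptions made for this illustration.

```python
import math


def stream_lif(events, tau=20.0, v_threshold=1.0):
    """Consume (time_ms, weight) input events in arrival order; yield output spike times.

    Between events the potential decays exponentially, so no computation
    happens while the input is silent.
    """
    v = 0.0
    t_last = 0.0
    for t, weight in events:
        v *= math.exp(-(t - t_last) / tau)  # leak accumulated since the last event
        v += weight                         # integrate the incoming spike
        t_last = t
        if v >= v_threshold:
            yield t   # respond immediately, before later events arrive
            v = 0.0   # reset after firing


if __name__ == "__main__":
    # Two inputs close together fire the neuron; an isolated input does not.
    incoming = iter([(0.0, 0.6), (1.0, 0.6), (200.0, 0.6)])
    for spike_time in stream_lif(incoming):
        print("output spike at", spike_time, "ms")
```

Because `stream_lif` is a generator, it can be attached to any iterator of events, including one that is still being produced by a sensor or an upstream layer.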

Dynamic adaptability

Thanks to temporal coding, SNNs can adapt to changing inputs in real time, potentially making them more resilient than traditional neural networks.

Why aren’t SNNs mainstream yet?

The biggest roadblock is training. Unsupervised learning mechanisms inspired by biology exist, such as Hebbian learning and Spike-Timing-Dependent Plasticity (STDP), but there is still no robust supervised training method that lets SNNs surpass conventional deep learning. Because spikes are discrete and non-differentiable, gradient-based methods cannot be applied directly, and workarounds that smooth or approximate the spike tend to compromise temporal precision.
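As an illustration of how a biologically inspired rule like STDP works, the sketch below implements a simple pair-based variant: a synapse is strengthened when the input (pre-synaptic) spike precedes the output (post-synaptic) spike and weakened when the order is reversed, with the effect fading as the two spikes move further apart in time. The exponential window, the constants `a_plus`, `a_minus`, `tau_plus`, and `tau_minus`, and the function names are assumptions for this example; real models differ in these details.

```python
import math


def stdp_delta(dt, a_plus=0.01, a_minus=0.012, tau_plus=20.0, tau_minus=20.0):
    """Weight change for one spike pair, where dt = t_post - t_pre (in ms)."""
    if dt > 0:
        # Pre fired before post: the input may have helped cause the output.
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:
        # Post fired before pre: the input arrived too late to contribute.
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0


def apply_stdp(weight, pre_times, post_times, w_min=0.0, w_max=1.0):
    """Sum the contributions of all pre/post pairs, then clip the weight to its bounds."""
    for t_pre in pre_times:
        for t_post in post_times:
            weight += stdp_delta(t_post - t_pre)
    return min(max(weight, w_min), w_max)


if __name__ == "__main__":
    print("pre before post:", apply_stdp(0.5, pre_times=[10.0], post_times=[15.0]))
    print("post before pre:", apply_stdp(0.5, pre_times=[15.0], post_times=[10.0]))
```

Note that the rule uses only locally available spike times and no error signal, which is why it is unsupervised.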

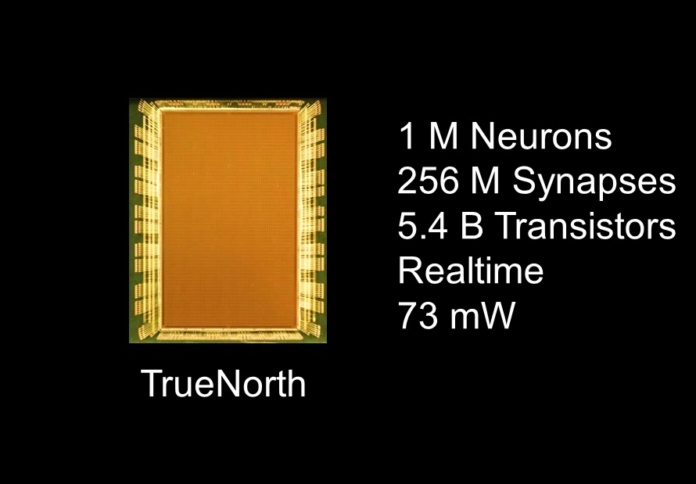

Another limitation is hardware: simulating spiking behavior on standard computers is computationally heavy, because it requires numerically solving differential equations for every neuron at every time step. However, neuromorphic chips such as IBM’s TrueNorth have been developed to emulate spike-driven behavior efficiently, paving the way for practical applications.

Final Thoughts

Spiking Neural Networks may not yet be widely applied, but they are a fascinating frontier in AI research. By modeling the brain more faithfully, SNNs not only hold potential for efficient, real-time computation but also offer insights into how biological intelligence actually works. Given that the human brain remains the most powerful computing system we know, uncovering its principles could redefine the future of Artificial Intelligence.

Coral Mountain Data is a data annotation and data collection company that provides high-quality data annotation services for Artificial Intelligence (AI) and Machine Learning (ML) models, ensuring reliable input datasets. Our annotation solutions include LiDAR point cloud data, enhancing the performance of AI and ML models. Coral Mountain Data also provides high-quality data about coral reefs, including sounds of coral reefs, marine life, waves…

Recommended for you

- News

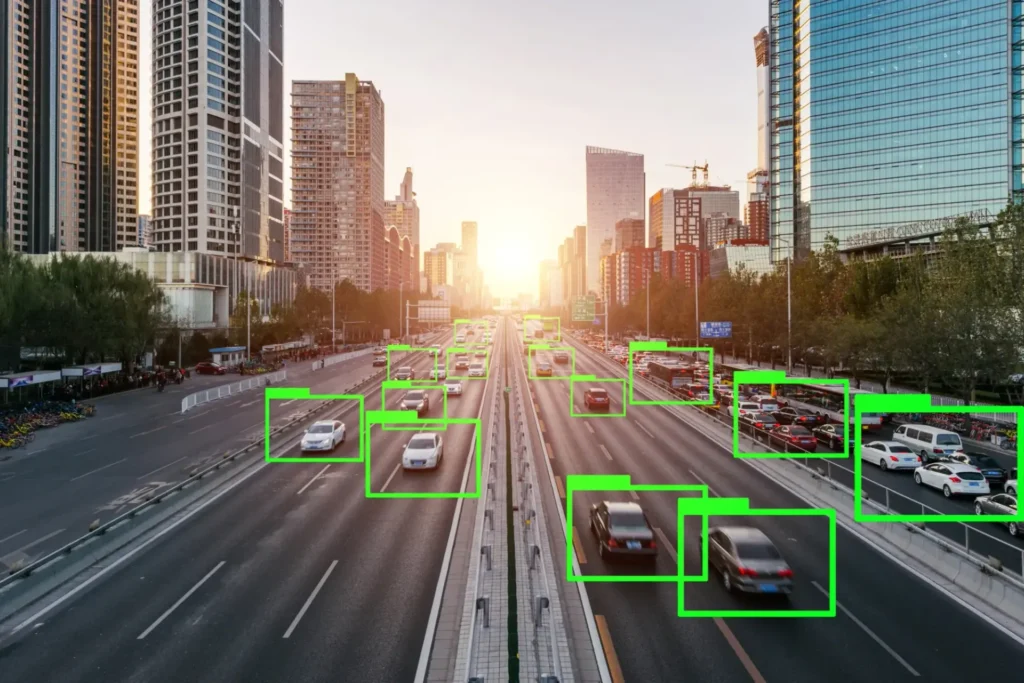

Explore how AVs learn to see: Key labeling techniques, QA workflows, and tools that ensure safe...

- News

How multi-annotator validation improves label accuracy, reduces bias, and helps build reliable AI training datasets at...

- News

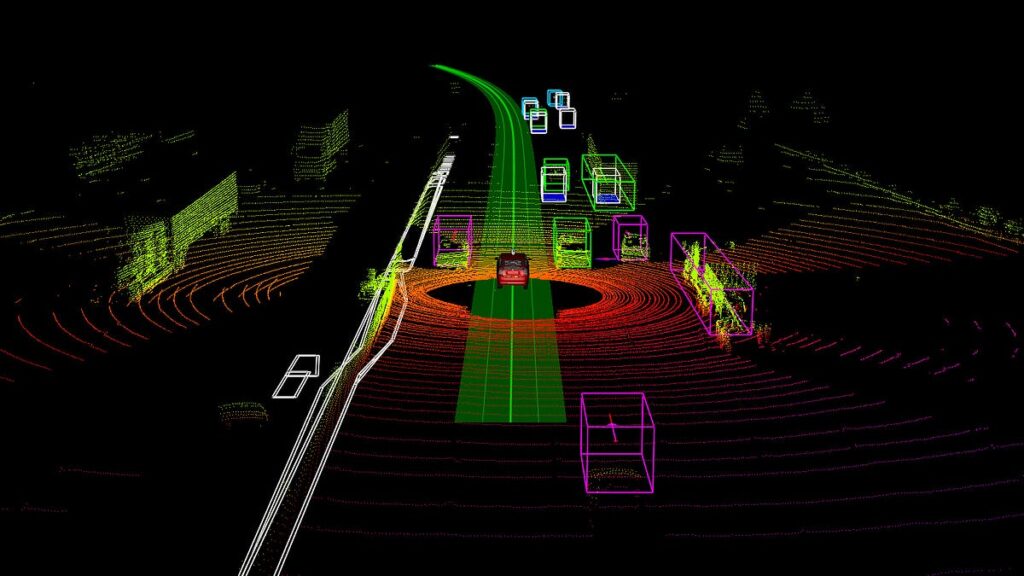

Discover the world of point cloud object detection. Learn about techniques, challenges, and real-world applications. Introduction...

Coral Mountain Data

Office

- Group 3, Cua Lap, Duong To, Phu Quoc, Kien Giang, Vietnam

- (+84) 39 652 6078

- info@coralmountaindata.com

Data Factory

- An Thoi, Phu Quoc, Vietnam

- Vung Bau, Phu Quoc, Vietnam

Copyright © 2024 Coral Mountain Data. All rights reserved.