A look at the ethical concerns surrounding the unsung heroes of AI – data annotators – and ways to safeguard their well-being.

High-quality annotated data is key to AI

As Artificial Intelligence (AI) continues to influence nearly every aspect of daily life, the demand for high-quality data has never been greater. Data annotation, the process of labeling data to train Machine Learning (ML) models, is the cornerstone of any AI pipeline. Much of this work is outsourced to human annotators, which raises serious ethical questions about their rights, working conditions, and mental health.

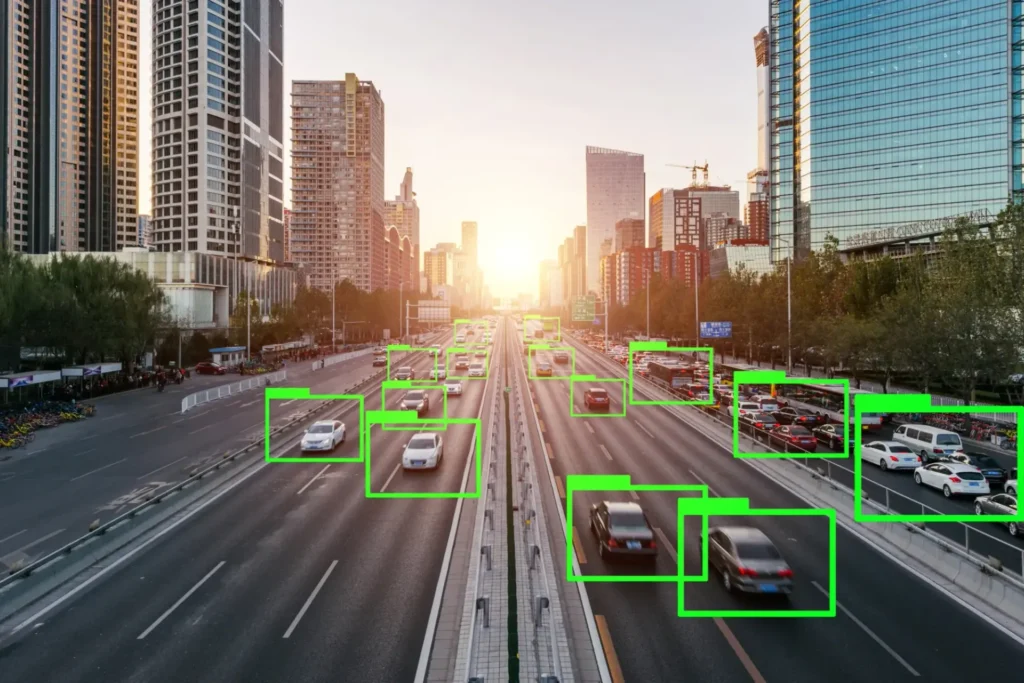

The quality of annotated data directly determines how well an ML model performs. It defines what an AI system can and cannot learn. For instance, in areas like object detection or language translation, even minor inconsistencies in annotations can distort model training and lead to unreliable predictions.

In high-stakes scenarios such as autonomous driving, mislabeled pedestrians or vehicles can result in life-threatening consequences. Therefore, maintaining consistent, accurate annotations across massive datasets is essential to ensuring robust, real-world AI performance.
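One common way to put a number on annotation consistency is an inter-annotator agreement score. The sketch below computes Cohen's kappa for two annotators who labeled the same items. The label names and the sample data are illustrative assumptions, not taken from any real project, and kappa is only one of several agreement measures a team might choose.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items in the same order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two equal-length, non-empty label lists")
    n = len(labels_a)
    # Share of items on which both annotators chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Agreement expected by chance, from each annotator's own label frequencies.
    freq_a = Counter(labels_a)
    freq_b = Counter(labels_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical labels for six image crops (illustrative data only).
annotator_1 = ["pedestrian", "car", "car", "pedestrian", "cyclist", "car"]
annotator_2 = ["pedestrian", "car", "pedestrian", "pedestrian", "cyclist", "car"]
kappa = cohens_kappa(annotator_1, annotator_2)
print(f"Cohen's kappa: {kappa:.3f}")
```

A value near 1 means the annotators agree far more often than chance would predict, while a value near 0 suggests the labeling guidelines may be ambiguous and worth revisiting before the dataset grows.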

Rise of large-scale data annotation efforts

With AI’s rapid expansion across industries, the need for large and diverse annotated datasets has grown rapidly. From generative AI to computer vision and autonomous systems, the volume of data required to train dependable models keeps increasing.

To ensure diversity and fairness, datasets now include information from different regions, cultures, and demographics. This scale demands enormous labor, leading many companies to outsource annotation tasks to global workforces, use crowdsourcing platforms, or adopt hybrid approaches that combine human annotators with automated tools.

Coral Mountain, for example, develops scalable annotation workflows that integrate human expertise with semi-automated systems, helping AI teams maintain both quality and efficiency in data labeling.

Poor working conditions for data annotators

Despite their critical role, data annotators often face harsh realities. Long hours in front of screens, repetitive labeling tasks, and tight deadlines create physical strain and mental exhaustion. Many annotators work under contracts with minimal flexibility, inadequate pay, and limited job security – especially in regions where labor is cheap.

In some outsourcing hubs, annotators report working with outdated equipment, under strict quotas, and with little to no rest. Such conditions not only harm worker well-being but also reduce the overall quality of labeled data, undermining the integrity of AI systems.

Psychological toll from sensitive or explicit content

Beyond the physical strain, annotators frequently confront emotionally distressing material – from violent imagery to explicit content – particularly in projects related to content moderation or law enforcement. Extended exposure to such material can lead to severe psychological issues, including stress, anxiety, and PTSD.

One notable example is the class-action lawsuit filed in 2018 by Facebook content moderators, who alleged that prolonged exposure to graphic content without adequate psychological support had left them with trauma; Facebook agreed to a $52 million settlement in 2020. Similar risks exist across various annotation domains where human workers must handle sensitive data without the option to decline specific projects.

Without mental health resources or counseling, annotators risk long-term emotional harm – a hidden cost behind many AI systems.

Improving working conditions for data labelers

Improving the well-being of data annotators begins with fair compensation. Companies must move beyond the “low-cost labor” mindset and pay wages that reflect the focus, skill, and time the work demands. Competitive pay not only supports workers but also tends to produce higher-quality data.

Equally vital is the regulation of work hours. Since annotation tasks require sustained attention, overextending workers leads to burnout and reduced accuracy. Encouraging shorter shifts, regular breaks, and flexible schedules helps maintain both productivity and mental health.

In addition, offering mental health programs, counseling access, and stress management support can significantly improve job satisfaction. Some companies, including Coral Mountain, are now integrating wellness initiatives into their operations, recognizing that the quality of human input directly affects AI output.

Protection against psychological impact

For tasks involving disturbing or explicit content, safeguards must be implemented. Sensitive data should be handled by trained or voluntarily participating annotators, and automatic filtering systems should minimize unnecessary human exposure.

Major technology platforms like Meta and YouTube already use machine learning to pre-filter harmful content before human review. When exposure is unavoidable, frequent rotation, peer support systems, and trauma-informed counseling should be mandatory.
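The safeguards described here (automatic pre-filtering, voluntary participation, and rotation) can be sketched as a small routing routine. Everything in it is an assumption made for illustration: the harm score is imagined to come from some upstream classifier, the thresholds and per-shift caps are placeholder policy values, and the `Reviewer` type is hypothetical. It does not describe how Meta, YouTube, or any other platform actually works.

```python
from dataclasses import dataclass, field


@dataclass
class Reviewer:
    name: str
    opted_in: bool   # has volunteered for sensitive content
    shift_cap: int   # max sensitive items per shift (assumed policy value)
    assigned: list = field(default_factory=list)


def route(score, low=0.2, high=0.95):
    """Decide handling from a hypothetical harm score in [0, 1]."""
    if score >= high:
        return "auto_remove"   # confident enough that no human needs to see it
    if score <= low:
        return "auto_clear"
    return "human_review"


def assign(items, reviewers):
    """Rotate uncertain items across opted-in reviewers, respecting each cap.

    Returns the ids that could not be assigned without exceeding a cap.
    """
    pool = [r for r in reviewers if r.opted_in]
    unassigned = []
    turn = 0
    for item_id, score in items:
        if route(score) != "human_review":
            continue
        for offset in range(len(pool)):
            reviewer = pool[(turn + offset) % len(pool)]
            if len(reviewer.assigned) < reviewer.shift_cap:
                reviewer.assigned.append(item_id)
                turn = (turn + offset + 1) % len(pool)  # next item goes to someone else
                break
        else:
            unassigned.append(item_id)  # defer rather than overload anyone
    return unassigned


# Illustrative data: (item id, harm score from an assumed classifier).
items = [("a", 0.99), ("b", 0.5), ("c", 0.1), ("d", 0.6), ("e", 0.7), ("f", 0.8)]
reviewers = [Reviewer("r1", True, 1), Reviewer("r2", False, 5), Reviewer("r3", True, 2)]
deferred = assign(items, reviewers)
```

The design choice worth noting is that items exceeding every reviewer's cap are deferred instead of being forced onto someone, so the exposure limit stays a hard constraint and not a target.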

The Facebook moderator case demonstrated the urgent need for such measures and became a reference point for mental health protection in data-driven industries.

Escaping the race to the bottom

In the data annotation market, vendors often compete primarily on cost and delivery speed. This has led to a “race to the bottom,” where low prices come at the expense of ethical treatment and data quality.

Organizations can counter this by using structured evaluation frameworks that assess vendors based not just on price but also on fairness, quality standards, and worker well-being. Coral Mountain advocates for transparent vendor evaluation systems that prioritize ethical practices and sustainable data quality.
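A structured evaluation framework of this kind can be as simple as a weighted scorecard. The following is a minimal sketch under stated assumptions: the criteria names, the weights, the 0 to 5 rating scale, and both vendors are hypothetical, and a real framework would define how each rating is audited.

```python
# Assumed weights: price counts, but it cannot dominate the other criteria.
WEIGHTS = {"price": 0.25, "quality": 0.30, "fair_pay": 0.25, "wellbeing": 0.20}


def vendor_score(ratings, weights=WEIGHTS):
    """Weighted average of 0-5 ratings, one rating per criterion."""
    if set(ratings) != set(weights):
        raise ValueError("ratings must cover exactly the weighted criteria")
    for name, value in ratings.items():
        if not 0 <= value <= 5:
            raise ValueError(f"rating for {name} must be between 0 and 5")
    total_weight = sum(weights.values())
    return sum(weights[k] * ratings[k] for k in weights) / total_weight


# Hypothetical vendors: A is cheapest, B treats workers better.
vendors = {
    "vendor_a": {"price": 5, "quality": 3, "fair_pay": 2, "wellbeing": 2},
    "vendor_b": {"price": 3, "quality": 4, "fair_pay": 4, "wellbeing": 4},
}
scores = {name: vendor_score(r) for name, r in vendors.items()}
ranked = sorted(scores, key=scores.get, reverse=True)
print(ranked, scores)
```

With these assumed weights, the cheaper vendor no longer wins by default, which is the point of scoring on more than price.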

Legislation and industry standards

Despite the growing number of data annotators worldwide, the field remains largely unregulated. Governments and industry groups must establish clear labor standards, mental health protections, and ethical guidelines.

Initiatives such as the European Commission’s Ethics Guidelines for Trustworthy AI and efforts by the Partnership on AI are early steps toward more humane working environments. However, as AI evolves, these frameworks must continue to adapt and expand.

Conclusion

Data annotators are the unseen foundation of the AI revolution. Their meticulous work powers everything from self-driving cars to large language models – yet their contributions are often undervalued.

To truly advance AI ethically, we must ensure fair pay, humane conditions, and psychological safety for annotators worldwide. The future of AI depends not only on smarter machines but also on our ability to protect and value the humans who make them possible.

After all, technology should serve humanity – not the other way around.

Coral Mountain Data is a data annotation and data collection company that provides high-quality data annotation services for Artificial Intelligence (AI) and Machine Learning (ML) models, ensuring reliable input datasets. Our annotation solutions cover LiDAR point cloud data, enhancing the performance of AI and ML models. Coral Mountain Data also provides high-quality data about coral reefs, including sounds of coral reefs, marine life, waves…

