Outsourcing data annotation is a strategic decision that many organizations face today. While some prefer to keep the process in-house to maintain control, others turn to specialized service providers to handle the growing demands of AI and ML training data. The question remains: is outsourcing worth the cost? Let’s take a closer look at the challenges of managing annotation internally and the potential benefits of outsourcing.

Should you outsource data annotation?

Data annotation might sound simple on the surface—adding labels to datasets—but in practice, it involves multiple layers of complexity. Companies must balance speed, accuracy, and scalability, all while ensuring compliance with data security standards.

Internal annotation teams often struggle with issues like workforce management, inconsistent quality, or inadequate tooling. These bottlenecks can delay projects, increase costs, and ultimately affect the performance of AI models. Outsourcing, when done with the right partner, can address many of these challenges.

Managing a large labeling workforce

Training modern AI systems requires massive datasets—sometimes millions of annotations across text, images, audio, and video. For an organization to handle this internally, it must recruit, train, and manage a large workforce dedicated solely to labeling.

This comes with several challenges:

- High overhead costs – salaries, training, and infrastructure.

- Management complexity – monitoring productivity, maintaining consistency, and handling turnover.

- Distraction from core business – teams that should focus on product development or research get tied up in annotation operations.

Unless data annotation is a core business activity, running such a workforce often proves inefficient and unsustainable in the long run.

Lack of access to proper tools and software

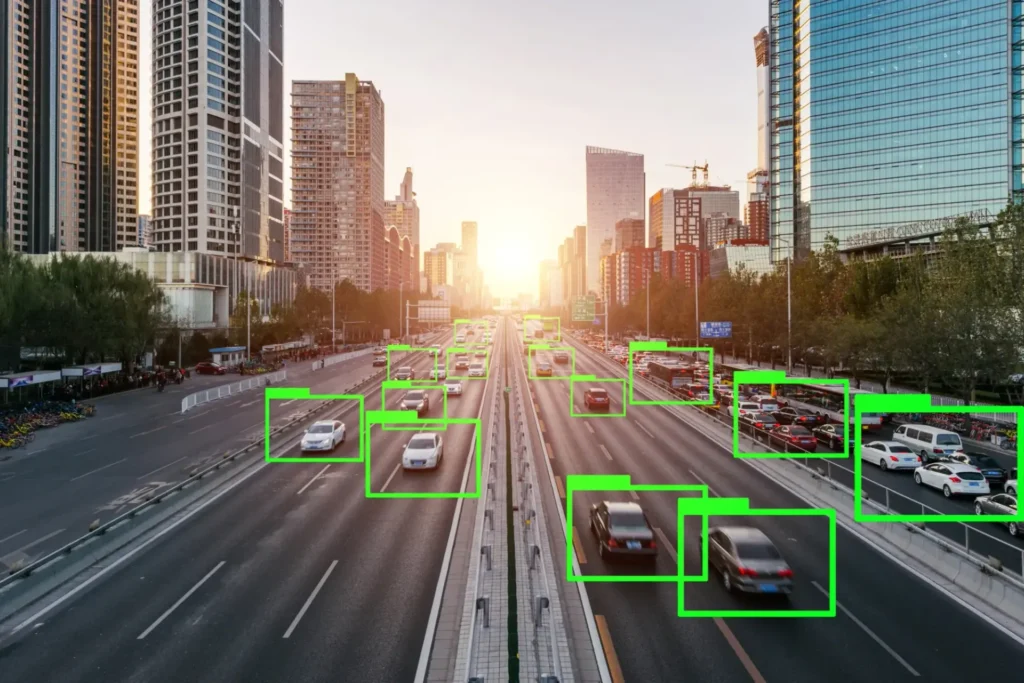

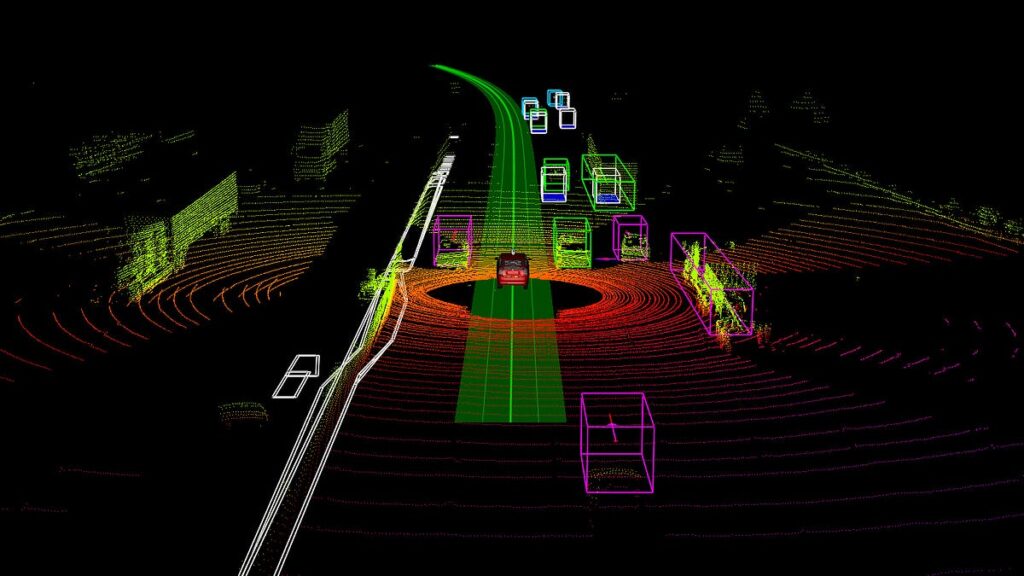

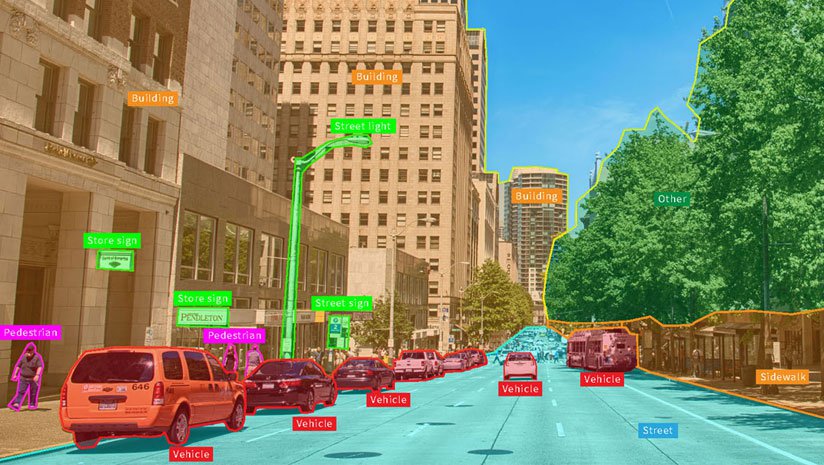

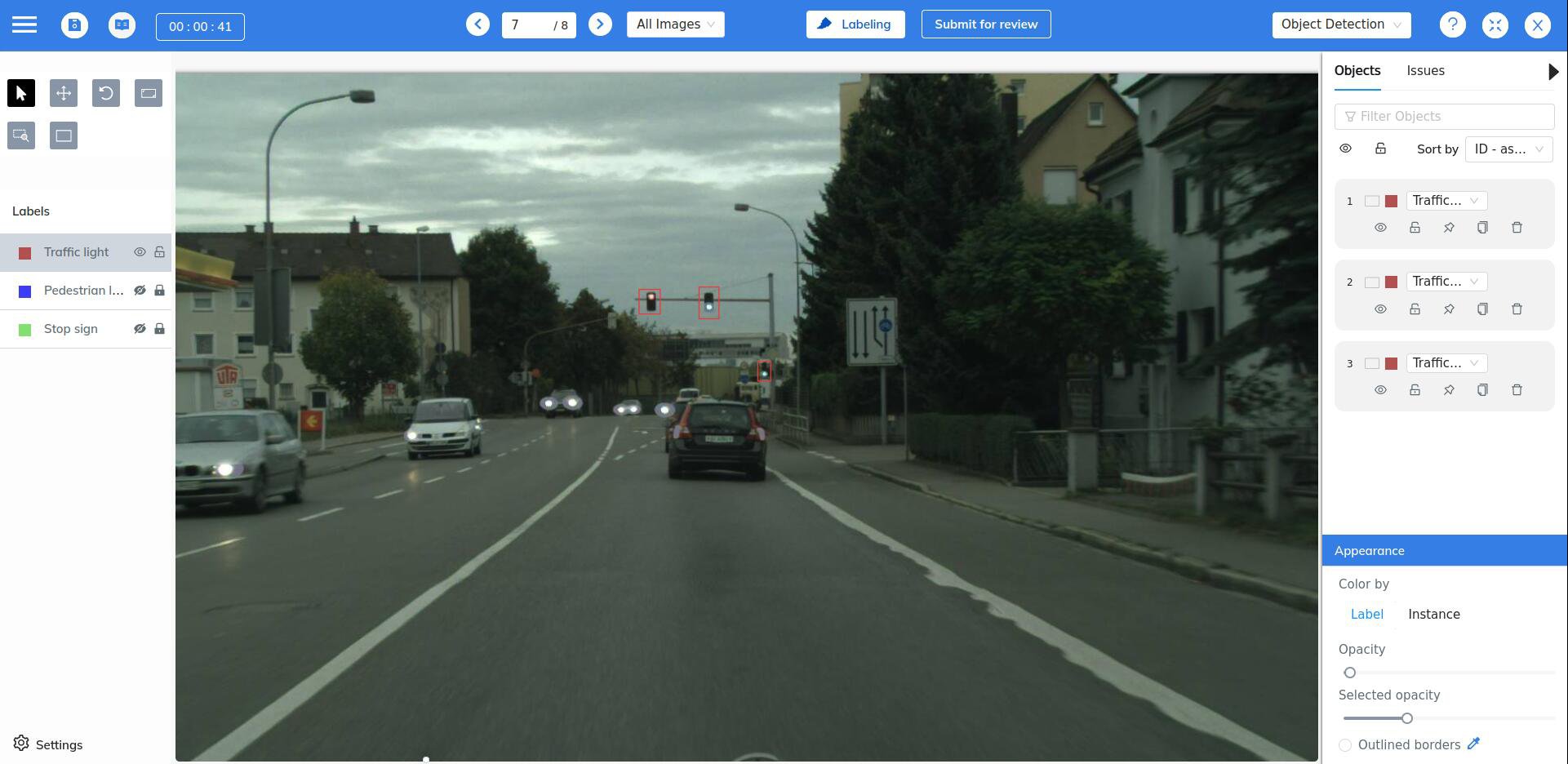

Manpower alone does not guarantee success. Annotation requires specialized tools for tasks such as bounding-box labeling, semantic segmentation, 3D point cloud labeling, and audio transcription, among others.

Developing such tools in-house is not only expensive but also time-consuming. Maintaining them to keep up with evolving AI requirements is an additional burden.

On the other hand, outsourcing providers invest heavily in developing or licensing advanced platforms designed for scale. These platforms often include features like:

- Built-in quality control and validation checks.

- Semi-automated labeling powered by AI.

- Collaboration and workflow management systems.

Free or open-source tools may suffice for small experiments, but they fall short when handling enterprise-scale datasets, especially for complex modalities like LiDAR or multi-sensor fusion.
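To make "built-in quality control and validation checks" more concrete, here is a minimal Python sketch of the kind of automatic check a platform might run on each bounding box before it reaches human review. The `Box` class, the `validate_box` function, the label set, and the minimum-area threshold are hypothetical illustrations. They are not the API of Mindkosh or any other product.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """Hypothetical bounding-box record in pixel coordinates."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def validate_box(box, image_width, image_height, allowed_labels, min_area=1.0):
    """Return a list of problems found; an empty list means the box passed."""
    problems = []
    # The label must come from the project's agreed label set.
    if box.label not in allowed_labels:
        problems.append(f"unknown label {box.label!r}")
    # Corners must be ordered so that width and height are positive.
    if box.x_max <= box.x_min or box.y_max <= box.y_min:
        problems.append("non-positive width or height")
    # Tiny boxes are often accidental clicks rather than real objects.
    elif (box.x_max - box.x_min) * (box.y_max - box.y_min) < min_area:
        problems.append("box area below minimum")
    # The box must lie entirely inside the image.
    if box.x_min < 0 or box.y_min < 0 or box.x_max > image_width or box.y_max > image_height:
        problems.append("box extends outside the image")
    return problems


labels = {"car", "pedestrian", "cyclist"}
print(validate_box(Box("car", 10, 20, 110, 80), 640, 480, labels))  # prints: []
print(validate_box(Box("truck", 600, 20, 700, 10), 640, 480, labels))
```

In this example the second box is flagged three times: for a label outside the agreed set, for an inverted height, and for extending past the right edge of the image. Checks like these catch mechanical mistakes instantly, leaving human reviewers free to judge whether the label itself is correct.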

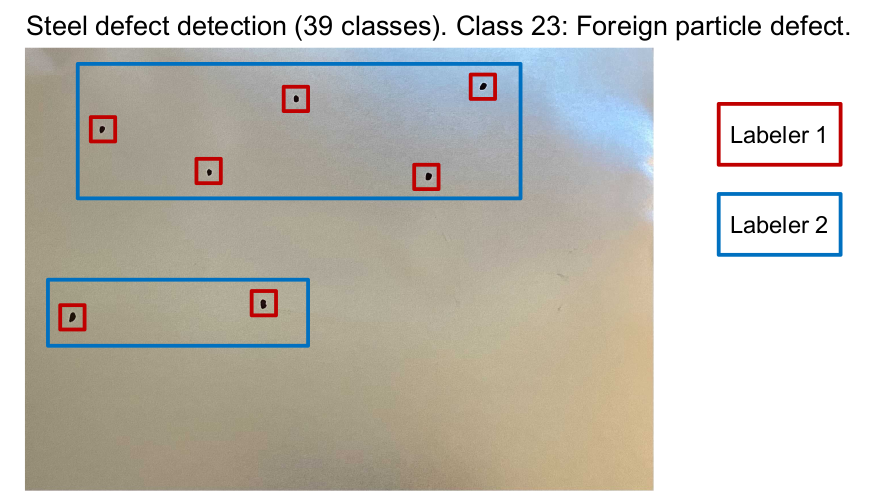

Difficulty in producing consistently high-quality labels

Quality is the cornerstone of AI training. Even small labeling errors can cascade into significant performance issues for models. Inconsistent data, mislabeled categories, or overlooked details can lead to reduced accuracy, biased outputs, or even system failures.

The most common issues include:

- Human error – inevitable at scale, and hard to catch without strong validation systems.

- Inconsistent labeling – different annotators may interpret instructions differently.

- Misunderstood guidelines – unclear instructions lead to wasted time and rework.

Maintaining quality in-house requires a robust review system, multiple layers of validation, and constant feedback loops—all of which are resource-intensive.
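One common way to measure inconsistent labeling is an inter-annotator agreement score. The sketch below computes Cohen's kappa for two annotators who labeled the same items. Cohen's kappa is a standard statistic used here for illustration only; it does not describe any specific vendor's review process, and the example labels are invented.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items in the same order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label sequences of equal length")
    n = len(labels_a)
    # Observed agreement: share of items where both annotators chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement, estimated from each annotator's own label frequencies.
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        # Both annotators used one identical label everywhere; kappa is undefined,
        # so this sketch treats it as full agreement.
        return 1.0
    return (observed - expected) / (1 - expected)


annotator_1 = ["car", "car", "truck", "truck", "car", "truck"]
annotator_2 = ["car", "truck", "truck", "truck", "car", "car"]
print(round(cohens_kappa(annotator_1, annotator_2), 3))  # prints: 0.333
```

A kappa near 1 indicates strong agreement beyond what chance would produce. A value near 0, as in this example, suggests that annotators are interpreting the guidelines differently and the instructions may need revision.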

Can outsourcing help?

Maintain quality across the dataset

Professional outsourcing firms employ annotators who are trained specifically for large-scale projects. They are equipped with advanced tools and workflows designed to catch errors early.

For example, at Mindkosh, our custom-built annotation tool integrates real-time error detection, annotation validation, and semi-automated AI assistance. This ensures annotations remain consistent across thousands of annotators while significantly reducing manual errors.

The result: datasets that are cleaner, more accurate, and more reliable for model training.

Scale up and down easily

AI projects rarely have steady annotation demand. Some phases require millions of annotations, while others need only small batches. Keeping a large in-house team through slow periods wastes resources, but scaling down can be disruptive.

Outsourcing solves this by offering on-demand scalability. Providers can quickly allocate more annotators when demand spikes and scale back without affecting delivery timelines. This flexibility allows companies to stay agile and cost-efficient.
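A quick calculation shows why fixed headcount is costly. This sketch estimates how many annotators a project phase needs to meet its deadline. The throughput and schedule figures in the example are assumed for illustration; they are not measured rates.

```python
import math


def annotators_needed(total_items, items_per_hour, hours_per_day, working_days):
    """Smallest team size that can label total_items within the deadline."""
    if min(items_per_hour, hours_per_day, working_days) <= 0:
        raise ValueError("throughput and schedule values must be positive")
    # Labels one annotator can finish before the deadline.
    items_per_annotator = items_per_hour * hours_per_day * working_days
    # Round up: a partial annotator still means hiring one more person.
    return math.ceil(total_items / items_per_annotator)


# Hypothetical phases: 60 labels per annotator-hour, 8-hour days, 20 working days each.
peak = annotators_needed(1_000_000, 60, 8, 20)
quiet = annotators_needed(50_000, 60, 8, 20)
print(peak, quiet)  # prints: 105 6
```

With these assumed numbers, a team sized for the peak phase is roughly 17 times larger than the quiet phase requires. That gap is the idle capacity an in-house team would carry between peaks.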

Meet deadlines with confidence

Internal teams often operate within standard office hours and face delays from training, onboarding, and process setup. This slows project delivery, especially for global companies working across time zones.

Outsourcing partners, by contrast, run optimized workflows with dedicated teams that can work extended hours or even around the clock. This accelerates project timelines and ensures deadlines are met—sometimes shaving weeks or months off completion schedules.
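The effect of extended coverage on the schedule can be estimated with a simple calculation. This sketch assumes that every covered hour is staffed by the same number of annotators working at a constant rate, with no losses at shift handovers. The function name and all figures are hypothetical.

```python
import math


def days_to_complete(total_items, annotators_per_shift, items_per_hour, coverage_hours_per_day):
    """Calendar days needed when each covered hour is staffed by the same team size."""
    if min(annotators_per_shift, items_per_hour, coverage_hours_per_day) <= 0:
        raise ValueError("staffing, throughput and coverage must be positive")
    items_per_day = annotators_per_shift * items_per_hour * coverage_hours_per_day
    return math.ceil(total_items / items_per_day)


# Hypothetical workload: 500,000 labels, 50 annotators per shift, 40 labels per hour each.
office_hours = days_to_complete(500_000, 50, 40, 8)      # one 8-hour shift per day
round_the_clock = days_to_complete(500_000, 50, 40, 24)  # three 8-hour shifts per day
print(office_hours, round_the_clock)  # prints: 32 11
```

The round-the-clock figure assumes three shifts, and therefore three times the total headcount. The saving is in calendar time, not in total labor hours.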

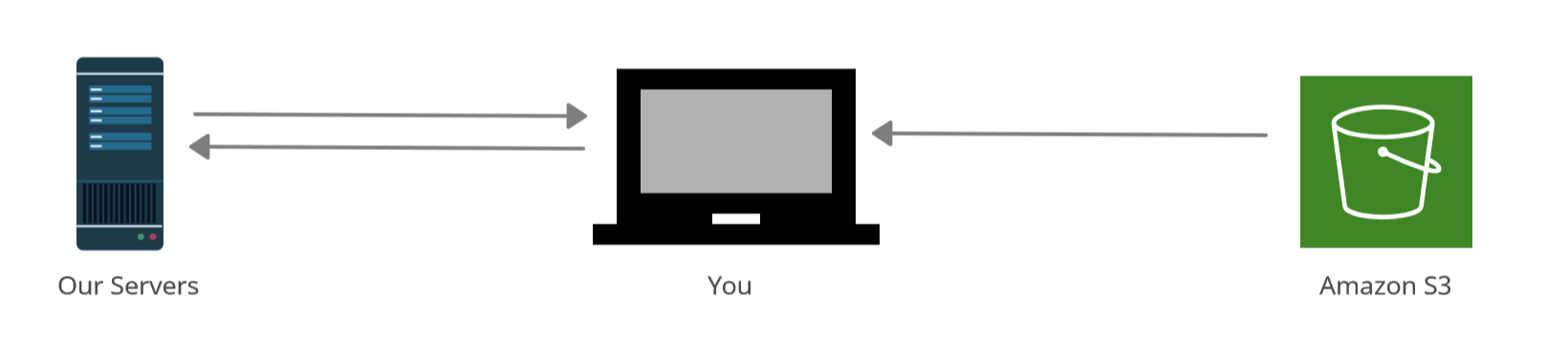

Is my data secure if I outsource?

Data security is often the biggest concern when considering outsourcing. Sensitive datasets—such as medical records, financial transactions, or proprietary business data—require strict protection.

At Mindkosh, security is built into every layer of our operations:

- Secure infrastructure – all data is stored in encrypted AWS S3 buckets with regular backups.

- Controlled access – labeling centers use security cameras, and annotators are restricted from carrying phones or storage devices.

- Direct streaming – clients can stream data directly from their own S3 accounts so data never leaves their environment.

- On-premise deployment – for clients with strict compliance needs, our tools can be deployed directly within their infrastructure.

These measures ensure that outsourcing does not compromise data confidentiality.

Final thought: Garbage in, garbage out

The saying holds especially true for AI: the performance of a model is only as good as the quality of the data it learns from. Poorly annotated data leads to unreliable models, no matter how advanced the architecture.

Outsourcing data annotation is not simply about reducing costs—it is about gaining access to experienced teams, advanced tools, and scalable processes that ensure high-quality datasets. For most organizations, this approach not only improves model accuracy but also frees internal teams to focus on innovation and core business goals.

Coral Mountain Data is a data annotation and data collection company that provides high-quality annotation services for Artificial Intelligence (AI) and Machine Learning (ML) models, ensuring reliable input datasets. Our annotation solutions include LiDAR point cloud labeling, which helps improve the performance of AI and ML models. Coral Mountain Data also provides high-quality data about coral reefs, including sounds of coral reefs, marine life, and waves.


Coral Mountain Data

Office

- Group 3, Cua Lap, Duong To, Phu Quoc, Kien Giang, Vietnam

- (+84) 39 652 6078

- info@coralmountaindata.com

Data Factory

- An Thoi, Phu Quoc, Vietnam

- Vung Bau, Phu Quoc, Vietnam

Copyright © 2024 Coral Mountain Data. All rights reserved.