Fusion of lidar data with cameras provides complementary information and enables powerful detection capabilities. Let’s explore the various sensor fusion techniques, benefits, applications and more.

Individual sensors like Lidars and cameras deliver strong capabilities when used independently, but each also carries inherent limitations. In mission-critical applications such as autonomous vehicles and robotics, where safety and redundancy are non-negotiable, relying on a single sensing modality is not sufficient. This is where sensor fusion proves essential.

By combining data from multiple sensors with complementary strengths, sensor fusion enables systems to form a more reliable and comprehensive understanding of the environment. In the sections below, we explore what sensor fusion is, why it matters, how it is implemented, and where it is used.

What is Lidar?

Lidar (Light Detection and Ranging) is a remote sensing technology that measures distances by emitting laser pulses and analyzing the reflected signals. It creates dense 3D point clouds that capture object geometry and depth with high precision. Each point in the cloud represents a distance measurement to a surface in the environment.
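To make the measurement principle concrete, here is a minimal sketch of how a single laser return could become one point in the cloud. It assumes a simple time-of-flight sensor and an x-forward, y-left, z-up sensor frame. The function names and the axis convention are illustrative assumptions, not taken from any particular Lidar driver.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0  # speed of light in vacuum, metres per second


def tof_to_range(round_trip_s: float) -> float:
    """Range in metres from the round-trip travel time of one pulse."""
    # The pulse travels to the surface and back, so halve the path length.
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def polar_to_point(range_m: float, azimuth_rad: float, elevation_rad: float):
    """Convert one return (range, azimuth, elevation) to (x, y, z).

    Assumed convention: x forward, y left, z up, angles in radians.
    """
    horizontal = range_m * math.cos(elevation_rad)
    return (
        horizontal * math.cos(azimuth_rad),
        horizontal * math.sin(azimuth_rad),
        range_m * math.sin(elevation_rad),
    )


if __name__ == "__main__":
    distance = tof_to_range(200e-9)  # a 200 ns round trip
    print(round(distance, 2), polar_to_point(distance, 0.0, 0.0))
```

Repeating this for every beam direction in a sweep yields the point cloud described above.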

Lidar sensors are available in various mechanical and solid-state configurations, offering trade-offs in range, resolution, cost, and field of view. Regardless of the form factor, their ability to deliver accurate depth perception makes them indispensable for perception tasks.

Advantages of Lidar over Cameras

Although Lidar and cameras complement one another, Lidar offers several major advantages in specific operational scenarios:

Depth Perception

Lidar directly measures distance with centimeter-level accuracy, allowing precise 3D reconstruction. Cameras require stereo algorithms or disparity estimation to infer depth, which introduces uncertainty and limitations, especially at long ranges or in textureless areas.
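The long-range limitation of stereo cameras follows from the standard pinhole stereo relation, depth = focal length × baseline / disparity. A fixed disparity error therefore produces a depth error that grows with the square of the distance. The sketch below illustrates this with made-up camera parameters (1000 px focal length, 0.5 m baseline, 0.25 px matching error). These numbers are assumptions chosen for illustration only.

```python
def stereo_depth(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth in metres from disparity, for a rectified pinhole stereo pair."""
    return focal_px * baseline_m / disparity_px


def stereo_depth_error(focal_px: float, baseline_m: float,
                       depth_m: float, disparity_error_px: float) -> float:
    """First-order depth error caused by a small disparity error.

    Differentiating Z = f * B / d gives |dZ| = Z**2 / (f * B) * |dd|.
    """
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_error_px


if __name__ == "__main__":
    for depth in (10.0, 50.0, 100.0):
        err = stereo_depth_error(1000.0, 0.5, depth, 0.25)
        print(f"depth {depth:5.0f} m -> approx. error {err:.2f} m")
```

With these assumed values the error is a few centimeters at 10 m but several meters at 100 m, whereas a Lidar range measurement does not degrade in this quadratic way.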

Performance in Low Light

Lidar performance is largely independent of lighting conditions. It operates effectively in complete darkness, whereas cameras degrade significantly in dim or backlit environments.

Precision and Accuracy

Because Lidar measures surfaces directly in 3D space, an object's size and position can be read from the point cloud without the depth-inference step that cameras require. Object boundaries and dimensions can be determined with high confidence.

Resistance to Visual Obstructions

Lidar often continues to return usable measurements through light dust, fog, or rain, although heavy fog or precipitation scatters the laser and shortens its effective range. Cameras typically struggle in these situations due to contrast loss or glare.

Wide Field of View

Many Lidar units provide full 360° scanning, enabling continuous environmental monitoring. Achieving equivalent coverage with cameras requires multiple devices and complex stitching logic.

What is Lidar Sensor Fusion?

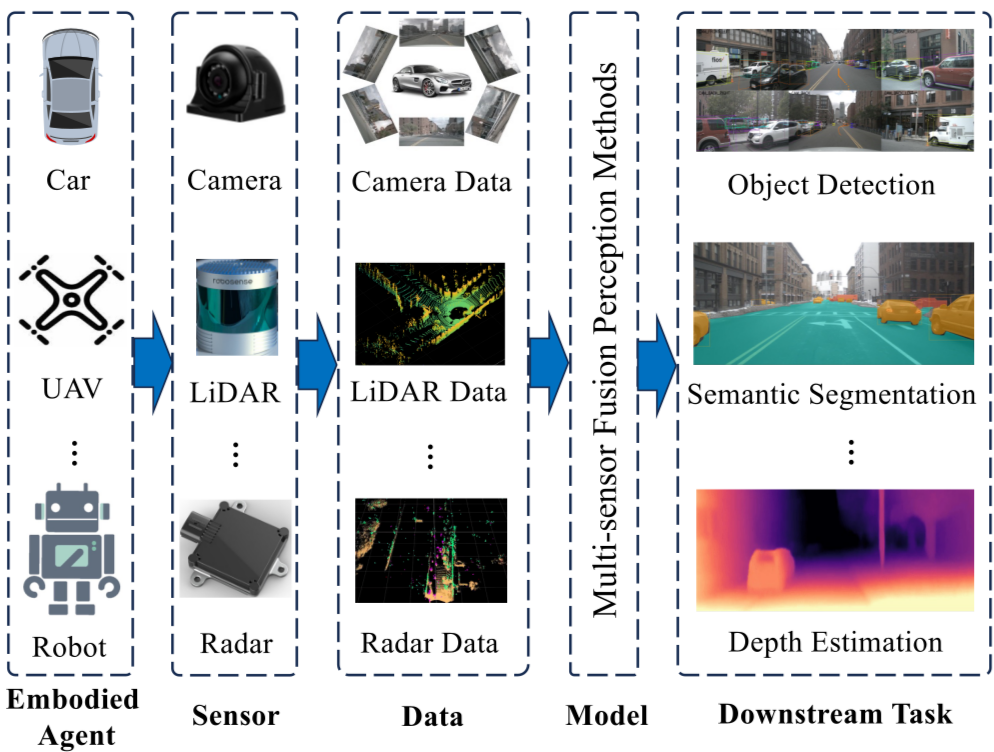

Lidar sensor fusion is the process of integrating Lidar outputs with data from other sensing modalities, commonly cameras or RADAR, to build a more accurate and redundant perception system.

Instead of depending on a single sensor’s strengths, fusion allows multiple inputs to collaborate. For example, Lidar may provide precise distance measurements, while cameras offer rich semantic context such as color or texture. When combined, the system becomes both more resilient and more intelligent.

Key Advantages of Lidar Sensor Fusion

Redundancy

If one sensor temporarily fails or becomes unreliable, others can compensate. For instance, cameras may struggle in low light, while Lidar remains unaffected.

Certainty

Overlapping detection from multiple sensors allows confidence scoring and error minimization through cross-verification.
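One simple way to turn overlapping detections into a single confidence score is a "noisy-OR" combination. The sketch below is only one possible scheme, not a method prescribed by this article. It assumes that each sensor reports a calibrated probability for the same object and that the sensors fail independently, which real systems only approximate.

```python
def fused_confidence(confidences):
    """Noisy-OR fusion of per-sensor detection confidences.

    Assumption: each value is a probability in [0, 1] and the sensors
    err independently. The object is missed only if every sensor misses it.
    """
    miss_probability = 1.0
    for confidence in confidences:
        if not 0.0 <= confidence <= 1.0:
            raise ValueError("confidences must lie in [0, 1]")
        miss_probability *= 1.0 - confidence
    return 1.0 - miss_probability


if __name__ == "__main__":
    # Camera is 70% sure, Lidar is 80% sure about the same object.
    print(fused_confidence([0.7, 0.8]))
```

Agreement between sensors raises the fused score above either individual score, while a detection seen by only one sensor keeps its original confidence.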

Contextual Understanding

Fusing geometric data from Lidar with visual cues from cameras results in superior scene interpretation, identifying not just where an object is but also what it is.

How Does Lidar Sensor Fusion Work?

Sensor fusion techniques are generally categorized into three types based on when data is combined during the processing pipeline:

- Early Fusion

- Mid-Level Fusion

- Late Fusion

Early Lidar Sensor Fusion

In early fusion, raw sensor data, such as point clouds and camera images, is merged before feature extraction.

Typical Pipeline:

- Point Cloud Projection: Lidar points are projected into the camera image space using known extrinsic (sensor position/orientation) and intrinsic (camera lens model) parameters.

- Feature Extraction: Features such as corners and edges are identified independently within each sensor’s raw data.

- Data Association: Matching features from both modalities creates a unified dataset containing both geometric and visual attributes.
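As a rough illustration of the unified dataset this pipeline produces, the sketch below attaches the color of the underlying image pixel to each Lidar point. It assumes the points have already been projected to pixel coordinates, and it skips the feature extraction step entirely. The function name `paint_points` and the array layouts are assumptions made for this example.

```python
import numpy as np


def paint_points(points_xyz, pixels_uv, image):
    """Attach image colors to Lidar points that land inside the image.

    points_xyz: (N, 3) point coordinates.
    pixels_uv:  (N, 2) projected pixel coordinates, column (u) then row (v).
    image:      (H, W, 3) color image.
    Returns an (M, 6) array of [x, y, z, c0, c1, c2] for the M points in view.
    """
    height, width = image.shape[:2]
    cols = np.round(pixels_uv[:, 0]).astype(int)
    rows = np.round(pixels_uv[:, 1]).astype(int)
    # Keep only points whose pixel falls inside the image bounds.
    inside = (cols >= 0) & (cols < width) & (rows >= 0) & (rows < height)
    colors = image[rows[inside], cols[inside]].astype(np.float32)
    return np.hstack([points_xyz[inside].astype(np.float32), colors])


if __name__ == "__main__":
    image = np.zeros((2, 3, 3), dtype=np.uint8)
    image[1, 2] = (10, 20, 30)
    points = np.array([[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]])
    pixels = np.array([[2.0, 1.0], [5.0, 0.0]])  # second point is off-image
    print(paint_points(points, pixels, image))
```

Each output row carries both geometric and visual attributes, which is the kind of multimodal input a downstream detector can consume.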

Benefits:

- Produces rich, multimodal data early in the pipeline

- Enables highly accurate object recognition

Challenges:

- Computationally intensive

- Sensitive to calibration errors

- Limited tooling and literature compared to other fusion types

Late Lidar Sensor Fusion

Late fusion combines high-level predictions from individual sensors rather than raw data.

Process Summary:

- Independent Detection: Each sensor runs its own detection pipeline.

- Result Matching: Final outputs are merged based on criteria like IoU (Intersection over Union) or tracking consistency.
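The result-matching step can be sketched as follows. This minimal example assumes both pipelines output axis-aligned 2D boxes in the same image plane (Lidar detections already projected), given as `(x_min, y_min, x_max, y_max)`. The greedy strategy and the 0.5 threshold are illustrative choices. Production systems often use optimal assignment (for example the Hungarian algorithm) instead.

```python
def iou(box_a, box_b):
    """Intersection over Union of two (x_min, y_min, x_max, y_max) boxes."""
    x1 = max(box_a[0], box_b[0])
    y1 = max(box_a[1], box_b[1])
    x2 = min(box_a[2], box_b[2])
    y2 = min(box_a[3], box_b[3])
    intersection = max(0.0, x2 - x1) * max(0.0, y2 - y1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


def match_detections(camera_boxes, lidar_boxes, iou_threshold=0.5):
    """Greedily pair camera and Lidar boxes, best overlap first.

    Returns a list of (camera_index, lidar_index, iou) tuples.
    Each box is used in at most one pair.
    """
    candidates = sorted(
        ((iou(c, l), i, j)
         for i, c in enumerate(camera_boxes)
         for j, l in enumerate(lidar_boxes)),
        reverse=True,
    )
    used_camera, used_lidar, matches = set(), set(), []
    for score, i, j in candidates:
        if score < iou_threshold:
            break  # all remaining candidates overlap even less
        if i in used_camera or j in used_lidar:
            continue
        used_camera.add(i)
        used_lidar.add(j)
        matches.append((i, j, score))
    return matches


if __name__ == "__main__":
    camera = [(0, 0, 10, 10), (20, 20, 30, 30)]
    lidar = [(21, 21, 31, 31), (1, 1, 11, 11)]
    print(match_detections(camera, lidar))
```

Detections left unmatched on either side are where the mismatching risk noted below shows up, so a real system needs a policy for keeping or discarding them.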

Benefits:

- Easier to implement and debug

- Less demanding in terms of compute resources

Challenges:

- Risk of mismatching detections

- Requires robust association logic

Mid-Level Lidar Sensor Fusion

Mid-level fusion strikes a balance by combining intermediate representations rather than raw data or final predictions.

Process Outline:

- Each sensor extracts meaningful features independently (e.g., edges from Lidar, textures from cameras).

- These features are transformed into a common reference frame.

- Machine learning models match and merge them into a unified understanding.

This approach offers strong adaptability in dynamic environments and tends to be more efficient than early fusion while being more informative than late fusion.
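A minimal sketch of the merge step is shown below, assuming both sensors' features have already been resampled onto the same grid in a common reference frame (for example a bird's-eye-view grid). The features are concatenated per cell and passed through one linear layer with a ReLU, which is equivalent to a 1x1 convolution. In a real model the weights would be learned. Here they are plain arrays supplied by the caller, and all names are hypothetical.

```python
import numpy as np


def fuse_feature_maps(lidar_features, camera_features, weights, bias):
    """Fuse two feature maps defined on the same H x W grid.

    lidar_features:  (H, W, C_lidar)
    camera_features: (H, W, C_camera)
    weights:         (C_lidar + C_camera, C_out), assumed to be learned
    bias:            (C_out,)
    Returns a fused (H, W, C_out) feature map.
    """
    if lidar_features.shape[:2] != camera_features.shape[:2]:
        raise ValueError("feature maps must share the same grid size")
    # Per-cell concatenation along the channel axis.
    stacked = np.concatenate([lidar_features, camera_features], axis=-1)
    # One linear layer applied independently to every grid cell.
    fused = stacked @ weights + bias
    return np.maximum(fused, 0.0)  # ReLU


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    lidar = rng.normal(size=(4, 4, 8))
    camera = rng.normal(size=(4, 4, 16))
    w = rng.normal(size=(24, 32))
    b = np.zeros(32)
    print(fuse_feature_maps(lidar, camera, w, b).shape)
```

Because the fused map has a fixed size regardless of how many raw points or pixels were captured, this design tends to be cheaper than early fusion while still letting the model see both modalities before it commits to a prediction.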

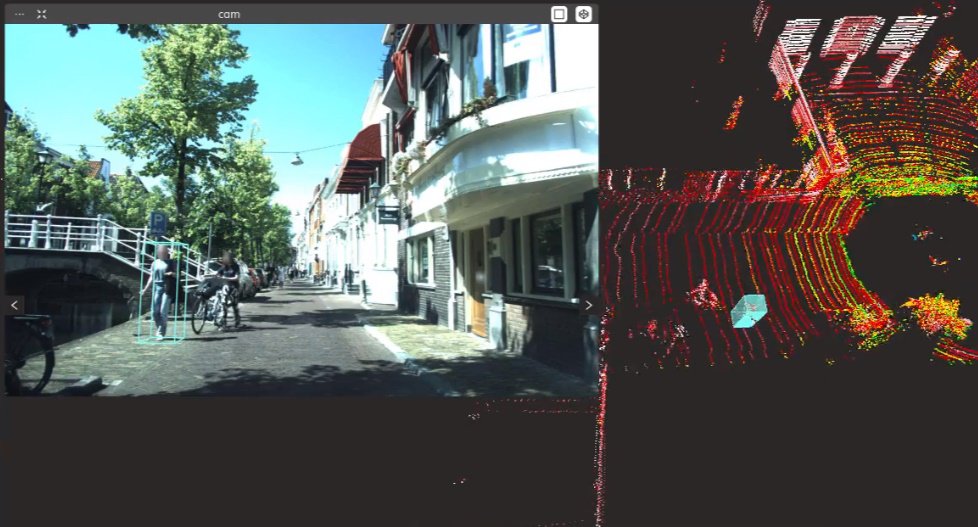

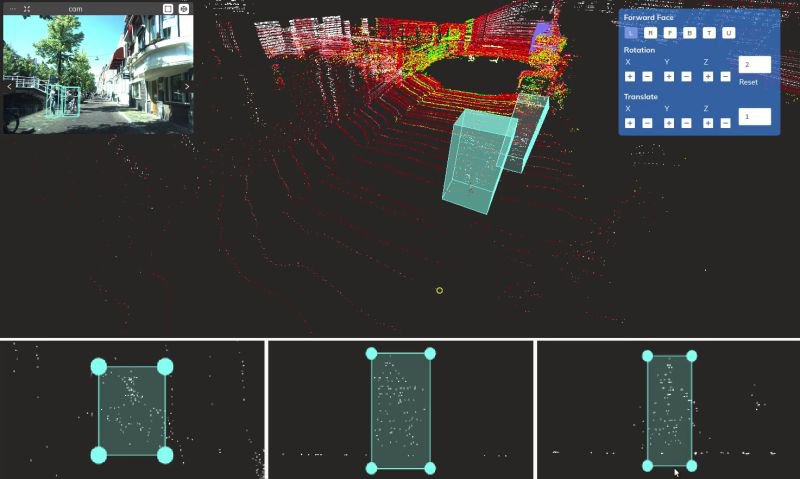

Projecting Lidar Points onto Images

Projection is critical across early and mid-level fusion. The process involves:

- Lidar and Camera Synchronization: Sensors capture data at different frame rates (e.g., 15 Hz Lidar vs. 20 FPS camera). Frame timestamps are matched to align corresponding samples. Without synchronization, motion of the platform (such as a car or robot) or of objects in the scene between the two capture times misaligns the Lidar points and the image.

- Point Projection

- Step 1: Calibration. Determine the relative position and rotation between sensors (extrinsic parameters).

- Step 2: Transformation and Projection. Convert Lidar coordinates into the camera frame and map them using the camera’s intrinsic matrix.
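The transformation and projection step can be sketched with a plain pinhole model. The example assumes the calibration results are available as a 4x4 extrinsic matrix mapping Lidar coordinates to camera coordinates and a 3x3 intrinsic matrix, that the camera frame has z pointing forward, and that lens distortion is negligible or already corrected. The names `T_cam_from_lidar` and `K` are conventions chosen for this example.

```python
import numpy as np


def project_lidar_to_image(points_lidar, T_cam_from_lidar, K, image_size):
    """Project Lidar points into an image with a pinhole camera model.

    points_lidar:     (N, 3) points in the Lidar frame.
    T_cam_from_lidar: (4, 4) extrinsic transform, Lidar frame -> camera frame.
    K:                (3, 3) camera intrinsic matrix.
    image_size:       (width, height) in pixels.
    Returns (pixels, depths): (M, 2) pixel coordinates and (M,) depths in
    metres for the points that are in front of the camera and inside the image.
    """
    count = points_lidar.shape[0]
    homogeneous = np.hstack([points_lidar, np.ones((count, 1))])
    points_cam = (T_cam_from_lidar @ homogeneous.T).T[:, :3]

    # Discard points behind (or almost on) the image plane.
    points_cam = points_cam[points_cam[:, 2] > 1e-6]

    projected = (K @ points_cam.T).T
    pixels = projected[:, :2] / projected[:, 2:3]  # perspective divide

    width, height = image_size
    inside = (
        (pixels[:, 0] >= 0) & (pixels[:, 0] < width)
        & (pixels[:, 1] >= 0) & (pixels[:, 1] < height)
    )
    return pixels[inside], points_cam[inside, 2]


if __name__ == "__main__":
    K = np.array([[100.0, 0.0, 50.0], [0.0, 100.0, 50.0], [0.0, 0.0, 1.0]])
    T = np.eye(4)  # assumption: Lidar and camera frames coincide
    pts = np.array([[0.0, 0.0, 2.0], [1.0, 0.0, 2.0], [0.0, 0.0, -1.0]])
    print(project_lidar_to_image(pts, T, K, (200, 100)))
```

Returning the depth alongside each pixel is what makes it possible to color the overlay by distance.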

This results in depth-aware overlays of point clouds onto images, which are useful for both visualization and annotation.
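Returning to the synchronization step described above, timestamp matching can be sketched as a nearest-neighbor search over sorted timestamps. The example assumes both sensors stamp their frames against a shared clock, in seconds. The 20 ms tolerance is an arbitrary illustrative value, and systems that need tighter alignment may use hardware triggering or motion compensation instead.

```python
import bisect


def match_timestamps(lidar_stamps, camera_stamps, max_offset_s=0.02):
    """Pair each Lidar sweep with the camera frame closest in time.

    Both inputs are lists of timestamps in seconds, sorted ascending.
    Returns a list of (lidar_index, camera_index) pairs. Sweeps with no
    camera frame within max_offset_s are dropped.
    """
    pairs = []
    for lidar_index, stamp in enumerate(lidar_stamps):
        position = bisect.bisect_left(camera_stamps, stamp)
        # The nearest frame is either just before or just after `position`.
        neighbors = [k for k in (position - 1, position)
                     if 0 <= k < len(camera_stamps)]
        if not neighbors:
            continue
        best = min(neighbors, key=lambda k: abs(camera_stamps[k] - stamp))
        if abs(camera_stamps[best] - stamp) <= max_offset_s:
            pairs.append((lidar_index, best))
    return pairs


if __name__ == "__main__":
    lidar = [0.0, 0.0667, 0.1333, 0.2]        # roughly 15 Hz
    camera = [0.0, 0.05, 0.10, 0.15, 0.20]    # 20 FPS
    print(match_timestamps(lidar, camera))
```

With these rates some camera frames are never paired, which is expected when the faster sensor produces more frames than the slower one.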

Annotating Data for Sensor Fusion

Labeling fused datasets is significantly more complex than annotating individual images or point clouds. Sparse depth data makes contextual cues from camera projections essential.

A suitable annotation platform must:

- Display synchronized multi-sensor data in one interface

- Allow projection of 3D annotations to 2D image planes

- Maintain consistent instance tracking across time and sensors

The Coral Mountain annotation platform supports these capabilities and is designed from the ground up for high-precision fusion workflows.
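To illustrate what projecting a 3D annotation to the 2D image plane involves, the sketch below converts a cuboid label into the 2D box that encloses its projected corners. It is a generic example, not a description of how any particular annotation platform works. It assumes the cuboid is already expressed in the camera frame (x right, y down, z forward), that yaw is a rotation about the camera's y axis, and that the camera is an undistorted pinhole with intrinsic matrix `K`.

```python
import numpy as np


def cuboid_corners(center, size, yaw):
    """Return the 8 corners, shape (8, 3), of a cuboid in the camera frame.

    center: (x, y, z) of the cuboid center.
    size:   full extents along the cuboid's local x, y, z axes.
    yaw:    rotation about the camera y axis, in radians.
    """
    signs = np.array(
        [[sx, sy, sz] for sx in (-1, 1) for sy in (-1, 1) for sz in (-1, 1)],
        dtype=float,
    )
    local = signs * np.asarray(size, dtype=float) / 2.0
    c, s = np.cos(yaw), np.sin(yaw)
    rotation = np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])
    return local @ rotation.T + np.asarray(center, dtype=float)


def cuboid_to_2d_box(center, size, yaw, K):
    """Project a cuboid to its enclosing (x_min, y_min, x_max, y_max) box.

    Returns None if any corner lies behind the camera. The result is not
    clipped to the image bounds.
    """
    corners = cuboid_corners(center, size, yaw)
    if np.any(corners[:, 2] <= 0):
        return None
    projected = corners @ K.T
    pixels = projected[:, :2] / projected[:, 2:3]
    x_min, y_min = pixels.min(axis=0)
    x_max, y_max = pixels.max(axis=0)
    return float(x_min), float(y_min), float(x_max), float(y_max)


if __name__ == "__main__":
    K = np.array([[100.0, 0.0, 50.0], [0.0, 100.0, 50.0], [0.0, 0.0, 1.0]])
    print(cuboid_to_2d_box((0.0, 0.0, 10.0), (2.0, 2.0, 2.0), 0.0, K))
```

Deriving the 2D box from the 3D label in this way keeps the two annotations consistent, so an annotator only has to adjust the cuboid once.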

Industrial Applications of Lidar Sensor Fusion

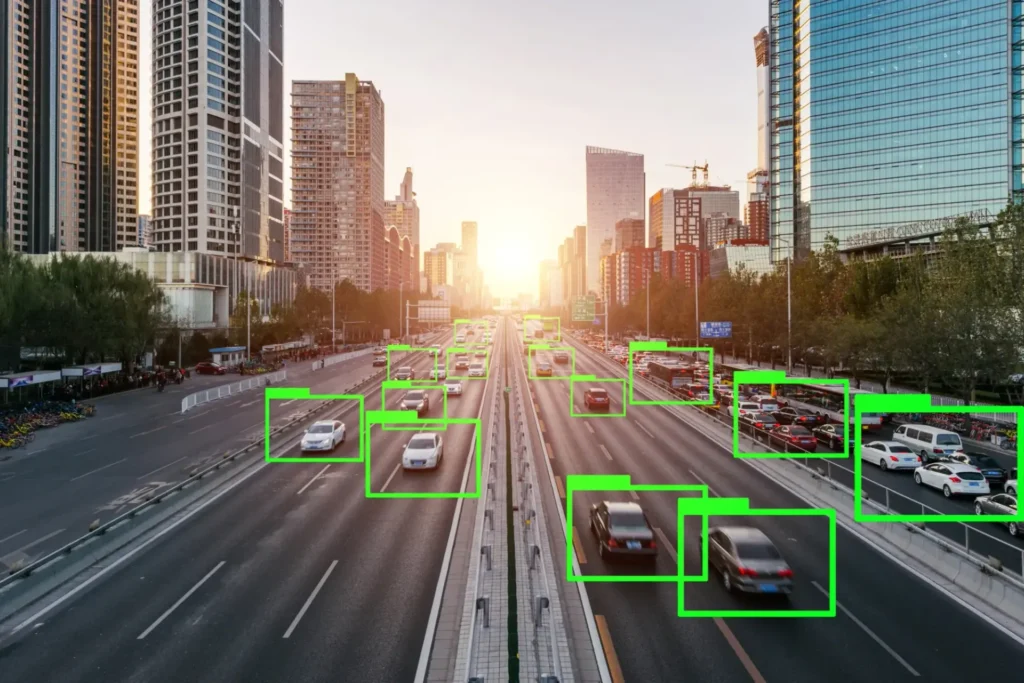

Autonomous Vehicles

- Enhances detection and tracking reliability

- Reduces blind spots through multi-sensor redundancy

Robotics

- Enables safe navigation and obstacle avoidance

- Facilitates 3D mapping and structured manipulation

Augmented Reality (AR)

- Improves depth estimation for object placement

- Enhances spatial awareness for interactive overlays

Summary

What is Lidar Sensor Fusion?

The combination of Lidar data with complementary sensors like cameras and RADAR to create a unified environmental model.

Why is it essential?

It improves accuracy, redundancy, and robustness under varying real-world conditions.

How is it done?

Through early, mid-level, or late fusion architectures, selected based on performance and application constraints.

Key Benefits:

- Precision

- Redundancy

- Holistic Scene Interpretation

Where is it used?

Autonomous vehicles, robots, AR systems, and any domain where spatial awareness is mission-critical.

Coral Mountain Data is a data annotation and data collection company that provides high-quality data annotation services for Artificial Intelligence (AI) and Machine Learning (ML) models, ensuring reliable input datasets. Our annotation solutions include LiDAR point cloud data, enhancing the performance of AI and ML models. Coral Mountain Data also provides high-quality data about coral reefs, including sounds of coral reefs, marine life, waves….


Copyright © 2024 Coral Mountain Data. All rights reserved.